Hardly any altcoin has been announced with as much fuss and enthusiasm as Ethereum. But what is there to the system that, according to the media, is supposed to decentralize “everything”?

ethereum – Google Blogsuche

I’m Gavin Wood, a co-founder of Ethereum and, along with Vitalik Buterin and Jeffrey Wilcke, one of the three directors of the Eth Dev, the NFP organisation that is managing the development (under contract from Ethereum Suisse) of the Ethereum blockchain. This is a small update to let you all know what has been going on recently.

I sit here on an immaculate couch that has been zapped forward in time from the 1960s. It is in the room that will become the chillout & wind-down room of the heart of the (C++) Ethereum development operation. Surrounding me is Alex Leverington on a Bond villain’s easy chair, and Aeron Buchanan stuck behind a locker that looks as though it was an original prop from M*A*S*H. Lighting equipment from a Soviet Blade Runner, now forgotten except in Berlin’s coolest districts where renaissance chemistry and 60s luxury breathe Frankensteinesque life into it, provides an unyielding glow to the work in progress. There is still much to be done here (I feel a little like I’m on the set of Challenge Anneka) but it is undeniably taking shape. This is thanks mostly to our own Anneka Rice, Sarah O’Neill, who is working around the clock to get this place ready for ÐΞVcon-0, our first developer symposium. Helping her, similarly around the clock, is the inimitable Roland, a hardened international interior outfitter whose memoirs I can’t wait to read.

On a personal note, I must say these last few months have been some of the busiest of my life. I spent the last couple of weeks between Switzerland and the UK, visiting Stephan, Ian and Louis. Despite the draws of the north of England, it’s nice to be back in Berlin; the combination of great burgers and cocktails, beautiful surroundings and nice people makes it difficult to leave. Awesome C++ coders are welcome to take that as a hint: we’re still hiring (-:

Technicals

During the last couple of weeks we’ve made a number of important revisions to the protocol, mostly provisions for creating light-client ÐApp nodes. There will be a directors’ post in due course detailing these, but suffice it to say we are as committed as ever that the Ethereum blockchain make possible the massively multi-user decentralised applications for all sizes of devices. The seventh in our proof-of-concept series is awaiting imminent release and the final in the series, PoC-8 will be starting development shortly.

Fresh meat

As time goes on, our team moves from strength to strength. I’m pleased to announce that Dr. Sven Ehlert has joined us. He will be leading development operations; cleaning up the build process, making the build as robust as possible, assisting Caktux in our CI systems and, most importantly, helping architect a stress-testing harness in which we’ll be simulating a series of extreme situations, measuring and analysing. He’s also a scrum aficionado and will be helping us streamline some of our development processes as our team grows.

It is with great pleasure I can also announce that Dr. Jutta Steiner will also be working closely with us in the capacity of managing our security audit. As well as being an enthusiastic ÐApp-developer, she comes with an excellent track record of handling projects and a superb understanding of not only this cutting edge technology but also the human processes that must go on behind it.

I must also shout out to Dr. Andreas Lubbe; though a long-time member of the Berlin Ethereum community and having worked on Ethereum-related code (a notable devotee of node.js), we have recently started working much more closely together on the secure Ethereum contract documentation (SECDoc) framework and the associated natural language specification format, NatSpec. I look forward to some great collaboration.

Aside from Lefteris, who began his first official day with us today (working with Christian on Solidity, and more specifically on the SECDoc and NatSpec portions of it), we have two new developers joining us: Yann Levreau and Arkadiy Paronyan. Yann, a recent arrival in Berlin from his native France will be joined by Arkadiy who is travelling all the way from Moscow to become part of the team. Both have substantial experience in C++ and related technologies and will be helping us flesh out the developer tools and in particular pave the way to the IDE vision.

Finally, I’m happy to report that Christoph Jentzsch, though originally joining us for only 2 months (while taking time out from his doctoral studies), will be joining the project full time in the new year and continuing his much appreciated work on our tests and the general C++ health and robustness.

ÐΞVcon-0

As time rushes by, Sarah, Roland and their team rush even more to finish our hub. For they know that come Monday the 24th, Berlin will have some new arrivals. Developers and collaborators from around the globe will descend on 37a Waldemarstraße, Berlin 10999 for a week of getting everybody on the same page. It is DEVcon-0: ethereum’s first developer symposium.

Conceived by myself and Jeff on a sleepy train from Zug to Zurich as a means of getting the Amsterdam/Go guys on the same page as the Berlin/C++ guys, it has evolved into a showcase, set of seminars and workshops of all of ÐΞV, our technologies, our personnel and some of our close collaborators. It is a chance for us each to build lasting professional relationships and bond in what will become a project that may, if not define, certainly form a hallmark of our professional lives.

Our hub will play host to around 40 people, the vast majority of whom are accomplished technical minds that Jeff, Vitalik or I have at one point or another mentioned, and for the period of a week we will be chatting, mingling and sharing our ideas, hopes and dreams for everything blockchain, decentralised and disruption related. It’s going to be awesome: look out for the videos!

Ever closer

Aside from the continuing work towards starting PoC-8 and the alpha series, I’m glad to report that the Solidity project storms onwards under the stewardship of Christian: the first contracts compiled with Solidity have been delivered and tested working on the testnet, and as I write this I see another Pull Request for state mappings. Great stuff.

Alex has also been working tirelessly on our crypto code and is now beginning work on the p2p layer, the full strategy for which we’ll be seeing in his address at ÐΞVcon. Marek and Marian have also been busy on the Javascript API, and I can assure any Javascript ÐApp developers that they will have a lot to look forward to in PoC-8.

Summing Up

There are also a few other developments and personnel I’d love to announce, but I fear, once again, it will have to wait until next time. Watch this space for a post-ÐΞVcon update!

Gav.

The post Gav’s Ethereum ÐΞV Update III appeared first on ethereum blog.

After the great success of the last Ethereum Introductory Workshop, round two is coming up! During the first part of the event, there will be an open coding.


Ethereum is currently receiving enormous attention in the crypto community. This is mainly because the "crypto wunderkind" Vitalik Buterin is behind its development. The 19-year-old is considered one of the smartest …


Special thanks to Vlad Zamfir for much of the thinking behind multi-chain cryptoeconomic paradigms

First off, a history lesson. In October 2013, when I was visiting Israel as part of my trip around the Bitcoin world, I came to know the core teams behind the colored coins and Mastercoin projects. Once I properly understood Mastercoin and its potential, I was immediately drawn in by the sheer power of the protocol; however, I disliked the fact that the protocol was designed as a disparate ensemble of “features”, providing a substantial amount of functionality for people to use, but offering no freedom to escape out of that box. Seeking to improve Mastercoin’s potential, I came up with a draft proposal for something called “ultimate scripting” – a general-purpose stack-based programming language that Mastercoin could include to allow two parties to make a contract on an arbitrary mathematical formula. The scheme would generalize savings wallets, contracts for difference, many kinds of gambling, among other features. It was still quite limited, allowing only three stages (open, fill, resolve) and no internal memory and being limited to 2 parties per contract, but it was the first true seed of the Ethereum idea.

I submitted the proposal to the Mastercoin team. They were impressed, but elected not to adopt it too quickly out of a desire to be slow and conservative, a philosophy that the project keeps to this day and that David Johnston mentioned at the recent Tel Aviv conference as Mastercoin’s primary differentiating feature. Thus, I decided to go out on my own and simply build the thing myself. Over the next three weeks I created the original Ethereum whitepaper (unfortunately now gone, but a still very early version exists here). The basic building blocks were all there, except the programming language was register-based instead of stack-based, and, because I was/am not skilled enough in p2p networking to build an independent blockchain client from scratch, it was to be built as a meta-protocol on top of Primecoin – not Bitcoin, because I wanted to satisfy the concerns of Bitcoin developers who were angry at meta-protocols bloating the blockchain with extra data.

Once competent developers like Gavin Wood and Jeffrey Wilcke, who did not share my deficiencies in ability to write p2p networking code, joined the project, and once enough people were excited that I saw there would be money to hire more, I made the decision to immediately move to an independent blockchain. The reasoning for this choice I described in my whitepaper in early January:

The advantage of a metacoin protocol is that it can allow for more advanced transaction types, including custom currencies, decentralized exchange, derivatives, etc, that are impossible on top of Bitcoin itself. However, metacoins on top of Bitcoin have one major flaw: simplified payment verification, already difficult with colored coins, is outright impossible on a metacoin. The reason is that while one can use SPV to determine that there is a transaction sending 30 metacoins to address X, that by itself does not mean that address X has 30 metacoins; what if the sender of the transaction did not have 30 metacoins to start with and so the transaction is invalid? Finding out any part of the current state essentially requires scanning through all transactions going back to the metacoin’s original launch to figure out which transactions are valid and which ones are not. This makes it impossible to have a truly secure client without downloading the entire 12 GB Bitcoin blockchain.
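The argument above can be made concrete with a toy sketch. The transaction tuples and the `replay` function below are hypothetical illustrations, not any real metacoin format; the point is only that an invalid transfer sits in the underlying chain just like a valid one, so a client learns which is which only by replaying everything from launch.

```python
def replay(transactions, genesis):
    """Recompute metacoin balances by replaying every transaction in order.

    Invalid transfers (sender lacks funds) are still embedded in the
    underlying chain, so only a full replay can filter them out.
    """
    balances = dict(genesis)
    for sender, recipient, amount in transactions:
        if amount > 0 and balances.get(sender, 0) >= amount:
            balances[sender] -= amount
            balances[recipient] = balances.get(recipient, 0) + amount
        # otherwise the transaction is present on-chain but ignored
    return balances


history = [
    ("alice", "bob", 30),  # valid: alice starts with 50
    ("carol", "X", 30),    # invalid: carol never held any metacoins
    ("bob", "X", 10),      # valid
]
balances = replay(history, {"alice": 50})
```

An SPV-style client that only sees the second transaction would observe "30 metacoins sent to X", yet after a full replay X holds only the 10 units that came from bob.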

Essentially, metacoins don’t work for light clients, making them rather insecure for smartphones, users with old computers, internet-of-things devices, and, once the blockchain scales enough, for desktop users as well. Ethereum’s independent blockchain, on the other hand, is specifically designed with a highly advanced light client protocol; unlike with meta-protocols, contracts on top of Ethereum inherit the Ethereum blockchain’s light client-friendliness properties fully. Finally, long after that, I realized that making an independent blockchain allows us to experiment with stronger versions of GHOST-style protocols, safely knocking down the block time to 12 seconds.

So what’s the point of this story? Essentially, had history been different, we easily could have gone the route of being “on top of Bitcoin” right from day one (in fact, we still could make that pivot if desired), but solid technical reasons existed then why we deemed it better to build an independent blockchain, and these reasons still exist, in pretty much exactly the same form, today.

Since a number of readers were expecting a response to how Ethereum as an independent blockchain would be useful even in the face of the recent announcement of a metacoin based on Ethereum technology, this is it. Scalability. If you use a metacoin on BTC, you gain the benefit of having easier back-and-forth interaction with the Bitcoin blockchain, but if you create an independent chain then you have the ability to achieve much stronger guarantees of security, particularly for weak devices. There are certainly applications for which a higher degree of connectivity with BTC is important; for these cases a metacoin would certainly be superior (although note that even an independent blockchain can interact with BTC pretty well using basically the same technology that we’ll describe in the rest of this blog post). Thus, on the whole, it will certainly help the ecosystem if the same standardized EVM is available across all platforms.

Beyond 1.0

However, in the long term, even light clients are an ugly solution. If we truly expect cryptoeconomic platforms to become a base layer for a very large amount of global infrastructure, then there may well end up being so many crypto-transactions altogether that no computer, except maybe a few very large server farms run by the likes of Google and Amazon, is powerful enough to process all of them. Thus, we need to break the fundamental barrier of cryptocurrency: that there need to exist nodes that process every transaction. Breaking that barrier is what gets a cryptoeconomic platform’s database from being merely massively replicated to being truly distributed. However, breaking the barrier is hard, particularly if you still want to maintain the requirement that all of the different parts of the ecosystem should reinforce each other’s security.

To achieve the goal, there are three major strategies:

- Building protocols on top of Ethereum that use Ethereum only as an auditing-backend-of-last-resort, conserving transaction fees.

- Turning the blockchain into something much closer to a high-dimensional interlinking mesh with all parts of the database reinforcing each other over time.

- Going back to a model of one-protocol (or one service)-per-chain, and coming up with mechanisms for the chains to (1) interact, and (2) share consensus strength.

Of these strategies, note that only (1) is ultimately compatible with keeping the blockchain in a form anything close to what the Bitcoin and Ethereum protocols support today. (2) requires a massive redesign of the fundamental infrastructure, and (3) requires the creation of thousands of chains, and for fragility mitigation purposes the optimal approach will be to use thousands of currencies (to reduce the complexity on the user side, we can use stable-coins to essentially create a common cross-chain currency standard, and any slight swings in the stable-coins on the user side would be interpreted in the UI as interest or demurrage so the user only needs to keep track of one unit of account).

We already discussed (1) and (2) in previous blog posts, and so today we will provide an introduction to some of the principles involved in (3).

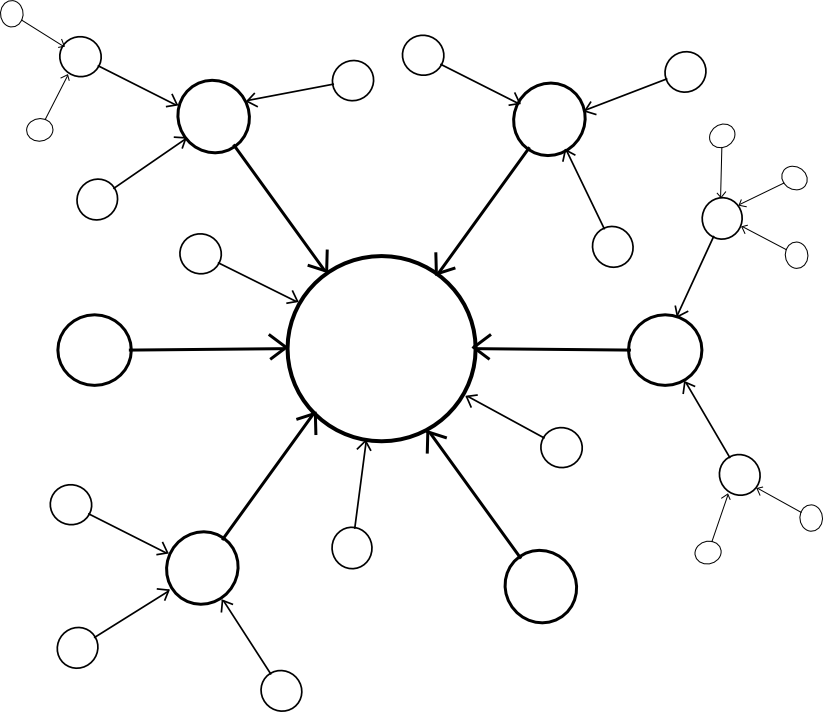

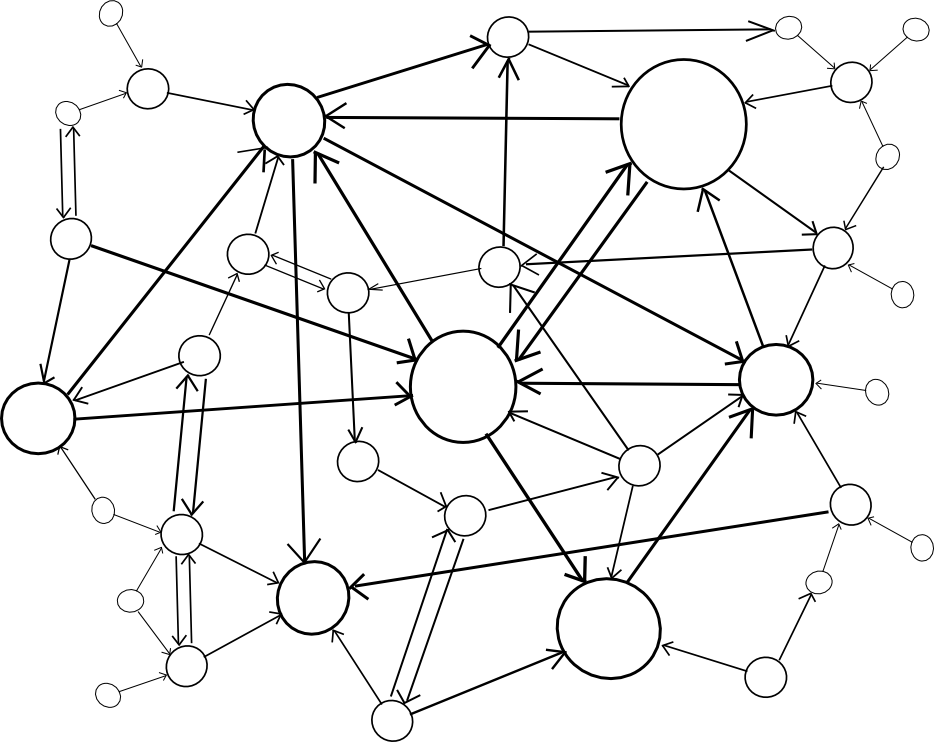

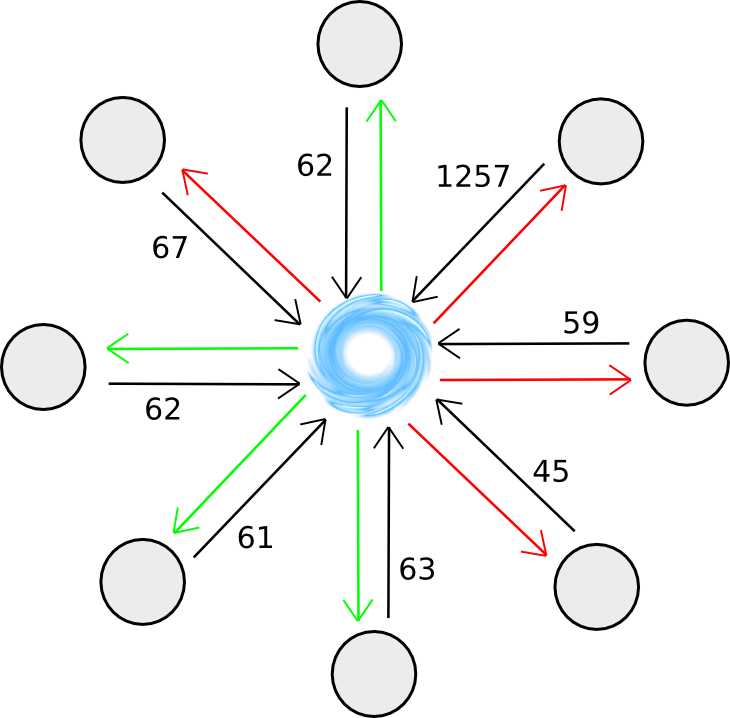

Multichain

The model here is in many ways similar to the Bitshares model, except that we do not assume that DPOS (or any other POS) will be secure for arbitrarily small chains. Rather, seeing the general strong parallels between cryptoeconomics and institutions in wider society, particularly legal systems, we note that there exists a large body of shareholder law protecting minority stakeholders in real-world companies against the equivalent of a 51% attack (namely, 51% of shareholders voting to pay 100% of funds to themselves), and so we try to replicate the same system here by having every chain, to some degree, “police” every other chain either directly or indirectly through an interlinking transitive graph. The kind of policing required is simple – policing against double-spends and censorship attacks from local majority coalitions, and so the relevant guard mechanisms can be implemented entirely in code.

However, before we get to the hard problem of inter-chain security, let us first discuss what actually turns out to be a much easier problem: inter-chain interaction. What do we mean by multiple chains “interacting”? Formally, the phrase can mean one of two things:

- Internal entities (ie. scripts, contracts) in chain A are able to securely learn facts about the state of chain B (information transfer)

- It is possible to create a pair of transactions, T in A and T’ in B, such that either both T and T’ get confirmed or neither do (atomic transactions)

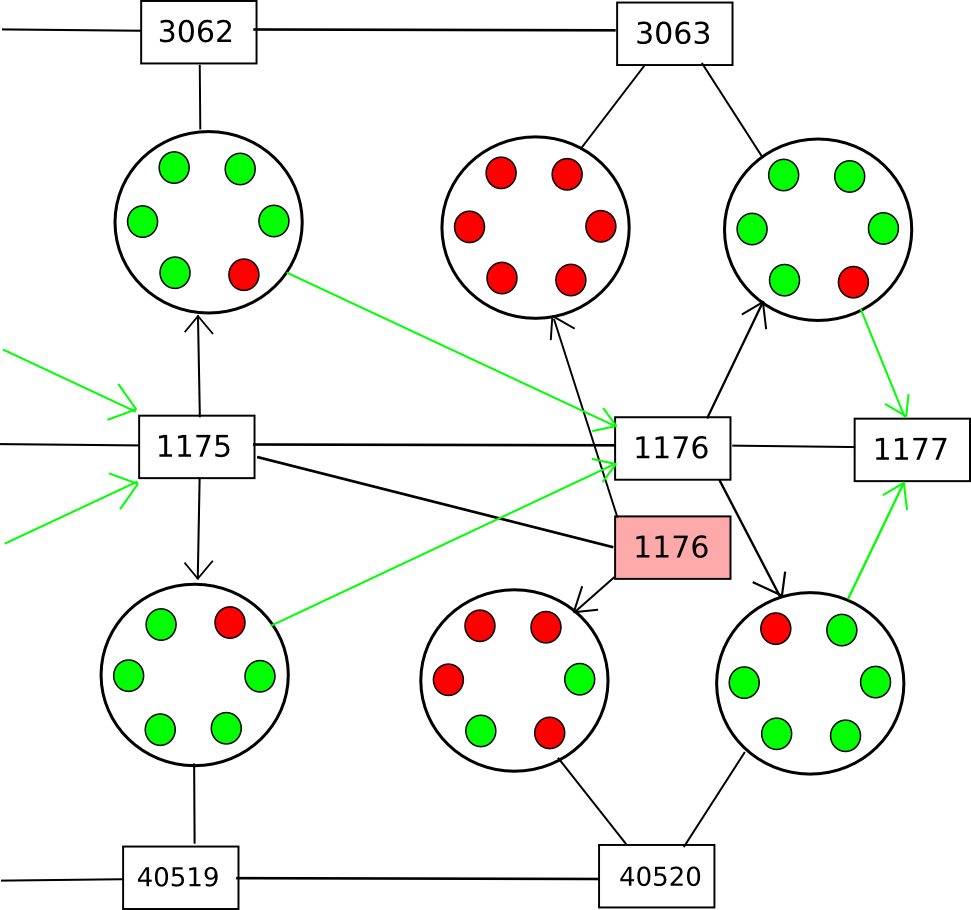

A sufficiently general implementation of (1) implies (2), since “T’ was (or was not) confirmed in B” is a fact about the state of chain B. The simplest way to do this is via Merkle trees, described in more detail here and here; essentially Merkle trees allow the entire state of a blockchain to be hashed into the block header in such a way that one can come up with a “proof” that a particular value is at a particular position in the tree that is only logarithmic in size in the entire state (ie. at most a few kilobytes long). The general idea is that contracts in one chain validate these Merkle tree proofs of contracts in the other chain.
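To make the logarithmic-size proof concrete, here is a minimal sketch of a plain binary Merkle tree. It assumes SHA-256 and duplicates the last node on odd-sized levels; both are illustrative choices rather than the structure any particular chain uses, and `build_levels`, `make_proof` and `verify` are hypothetical names.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves):
    """Return every tree level, from the hashed leaves up to the root."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]  # pad odd levels (assumption)
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def make_proof(levels, index):
    """Collect one sibling hash per level: the whole proof for one leaf."""
    proof = []
    for level in levels[:-1]:
        if len(level) % 2 == 1:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify(leaf, proof, root):
    """Recompute the path to the root using only the leaf and the proof."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


leaves = [b"balance:alice=20", b"balance:bob=20", b"balance:X=10"]
levels = build_levels(leaves)
root = levels[-1][0]
proof = make_proof(levels, 2)
```

A contract on the other chain would only need `root` (taken from a block header it has accepted) plus the short `proof` to run `verify`; it never sees the rest of the state.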

A challenge that is greater for some consensus algorithms than others is, how does the contract in a chain validate the actual blocks in another chain? Essentially, what you end up having is a contract acting as a fully-fledged “light client” for the other chain, processing blocks in that chain and probabilistically verifying transactions (and keeping track of challenges) to ensure security. For this mechanism to be viable, at least some quantity of proof of work must exist on each block, so that it is not possible to cheaply produce many blocks for which it is hard to determine that they are invalid; as a general rule, the work required by the blockmaker to produce a block should exceed the cost to the entire network combined of rejecting it.

Additionally, we should note that contracts are stupid; they are not capable of looking at reputation, social consensus or any other such “fuzzy” metrics of whether or not a given blockchain is valid; hence, purely “subjective” Ripple-style consensus will be difficult to make work in a multi-chain setting. Bitcoin’s proof of work is (fully in theory, mostly in practice) “objective”: there is a precise definition of what the current state is (namely, the state reached by processing the chain with the longest proof of work), and any node in the world, seeing the collection of all available blocks, will come to the same conclusion on which chain (and therefore which state) is correct. Proof-of-stake systems, contrary to what many cryptocurrency developers think, can be secure, but need to be “weakly subjective” – that is, nodes that were online at least once every N days since the chain’s inception will necessarily converge on the same conclusion, but long-dormant nodes and new nodes need a hash as an initial pointer. This is needed to prevent certain classes of unavoidable long-range attacks. Weakly subjective consensus works fine with contracts-as-automated-light-clients, since contracts are always “online”.

Note that it is possible to support atomic transactions without information transfer; TierNolan’s secret revelation protocol can be used to do this even between relatively dumb chains like BTC and DOGE. Hence, in general interaction is not too difficult.
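The idea behind a secret revelation swap can be sketched with a toy model. The `HashLockedOutput` class below is a hypothetical stand-in for an output that pays the recipient on presentation of a secret, or refunds the funder after a timeout; it is not real Bitcoin or Dogecoin script, and the timeouts are arbitrary.

```python
import hashlib


class HashLockedOutput:
    """Toy output: claimable with a secret, refundable after a timeout."""

    def __init__(self, secret_hash, recipient, refund_to, timeout):
        self.secret_hash = secret_hash
        self.recipient = recipient
        self.refund_to = refund_to
        self.timeout = timeout
        self.spent_by = None

    def claim(self, secret):
        # Claiming publishes the secret, so the counterparty learns it.
        if self.spent_by is None and hashlib.sha256(secret).digest() == self.secret_hash:
            self.spent_by = self.recipient
            return True
        return False

    def refund(self, now):
        if self.spent_by is None and now >= self.timeout:
            self.spent_by = self.refund_to
            return True
        return False


secret = b"only alice knows this at first"
lock = hashlib.sha256(secret).digest()

# Alice funds chain A with the longer timeout; Bob funds chain B with the shorter one.
on_chain_a = HashLockedOutput(lock, recipient="bob", refund_to="alice", timeout=48)
on_chain_b = HashLockedOutput(lock, recipient="alice", refund_to="bob", timeout=24)

on_chain_b.claim(secret)  # Alice claims on B, revealing the secret
on_chain_a.claim(secret)  # Bob reuses the revealed secret on A
```

Neither chain needs to know anything about the other: the only shared item is the hash, and the staggered timeouts give Bob time to use the secret once Alice has revealed it.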

Security

The larger problem, however, is security. Blockchains are vulnerable to 51% attacks, and smaller blockchains are vulnerable to smaller 51% attacks. Ideally, if we want security, we would like for multiple chains to be able to piggyback on each other’s security, so that no chain can be attacked unless every chain is attacked at the same time. Within this framework, there are two major paradigm choices that we can make: centralized or decentralized.

[Figure panels: Centralized | Decentralized]

A centralized paradigm is essentially every chain, whether directly or indirectly, piggybacking off of a single master chain; Bitcoin proponents often love to see the central chain being Bitcoin, though unfortunately it may be something else since Bitcoin was not exactly designed with the required level of general-purpose functionality in mind. A decentralized paradigm is one that looks vaguely like Ripple’s network of unique node lists, except working across chains: every chain has a list of other consensus mechanisms that it trusts, and those mechanisms together determine block validity.

The centralized paradigm has the benefit that it’s simpler; the decentralized paradigm has the benefit that it allows for a cryptoeconomy to more easily swap out different pieces for each other, so it does not end up resting on decades of outdated protocols. However, the question is, how do we actually “piggyback” on one or more other chains’ security?

To provide an answer to this question, we’ll first come up with a formalism called an assisted scoring function. In general, the way blockchains work is they have some scoring function for blocks, and the top-scoring block becomes the block defining the current state. Assisted scoring functions work by scoring blocks based on not just the blocks themselves, but also checkpoints in some other chain (or multiple chains). The general principle is that we use the checkpoints to determine that a given fork, even though it may appear to be dominant from the point of view of the local chain, can be determined to have come later through the checkpointing process.

A simple approach is that a node penalizes forks where the blocks are too far apart from each other in time, where the time of a block is determined by the median of the earliest known checkpoint of that block in the other chains; this would detect and penalize forks that happen after the fact. However, there are two problems with this approach:

- An attacker can submit the hashes of the blocks into the checkpoint chains on time, and then only reveal the blocks later

- An attacker may simply let two forks of a blockchain grow roughly evenly simultaneously, and then eventually push on his preferred fork with full force

To deal with (2), we can say that only the valid block of a given block number with the earliest average checkpointing time can be part of the main chain, thus essentially completely preventing double-spends or even censorship forks; every new block would have to point to the last known previous block. However, this does nothing against (1). To solve (1), the best general solutions involve some concept of “voting on data availability”; essentially, the participants in the checkpointing contract on each of the other chains would Schelling-vote on whether or not the entire data of the block was available at the time the checkpoint was made, and a checkpoint would be rejected if the vote leans toward “no”.
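The rule for dealing with (2) can be sketched as follows. The function name and data shapes are hypothetical, and the data-availability vote needed for (1) is deliberately left out; this only shows the "earliest average checkpoint time wins" selection for a single block number.

```python
from statistics import mean


def canonical_block(candidates, checkpoints):
    """Pick the candidate with the earliest average checkpointing time.

    candidates:  block hashes competing for the same block number
    checkpoints: {block_hash: [time first checkpointed on each other chain]}
    Candidates that were never checkpointed are not eligible.
    """
    timed = [block for block in candidates if checkpoints.get(block)]
    if not timed:
        return None
    return min(timed, key=lambda block: mean(checkpoints[block]))


checkpoints = {
    "honest": [100, 102, 101],
    "late_fork": [160, 158, 161],  # produced after the fact
}
winner = canonical_block(["honest", "late_fork", "never_seen"], checkpoints)
```

Because the fork only appears in the checkpoint chains later, it loses regardless of how much local weight the attacker piles onto it afterwards.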

Note that there are two versions of this strategy. The first is a strategy where participants vote on data availability only (ie. that every part of the block is out there online). This allows the voters to be rather stupid, and be able to vote on availability for any blockchain; the process for determining data availability simply consists of repeatedly doing a reverse hash lookup query on the network until all the “leaf nodes” are found and making sure that nothing is missing. A clever way to force nodes to not be lazy when doing this check is to ask them to recompute and vote on the root hash of the block using a different hash function. Once all the data is available, if the block is invalid an efficient Merkle-tree proof of invalidity can be submitted to the contract (or simply published and left for nodes to download when determining whether or not to count the given checkpoint).
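A minimal sketch of the availability check described above, under assumed encodings: branch nodes are `b"N"` followed by two 32-byte child hashes, leaves are `b"L"` followed by data, and `lookup` stands in for the network's reverse hash lookup query. None of these details come from a real protocol.

```python
import hashlib


def sha(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf(data: bytes) -> bytes:
    return b"L" + data


def branch(left_hash: bytes, right_hash: bytes) -> bytes:
    return b"N" + left_hash + right_hash


def data_available(root_hash, lookup):
    """Walk down from the root, requiring every node to be retrievable.

    lookup(hash) returns the node bytes, or None if nobody serves them.
    """
    stack = [root_hash]
    while stack:
        node_hash = stack.pop()
        node = lookup(node_hash)
        if node is None or sha(node) != node_hash:
            return False  # a piece of the block is missing or corrupted
        if node[:1] == b"N":
            stack.append(node[1:33])
            stack.append(node[33:65])
    return True


tx1, tx2 = leaf(b"tx1"), leaf(b"tx2")
top = branch(sha(tx1), sha(tx2))
network = {sha(n): n for n in (tx1, tx2, top)}
ok = data_available(sha(top), network.get)
```

Note that the voter never interprets the leaf contents, which is why the same check can be run against any blockchain.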

The second strategy is less modular: have the Schelling-vote participants vote on block validity. This would make the process somewhat simpler, but at the cost of making it more chain-specific: you would need to have the source code for a given blockchain in order to be able to vote on it. Thus, you would get fewer voters providing security for your chain automatically. Regardless of which of these two strategies is used, the chain could subsidize the Schelling-vote contract on the other chain(s) via a cross-chain exchange.

The Scalability Part

Up until now, we still don’t have any actual “scalability”; a chain is only as secure as the number of nodes that are willing to download (although not process) every block. Of course, there are solutions to this problem: challenge-response protocols and randomly selected juries, both described in the previous blog post on hypercubes, are the two that are currently best-known. However, the solution here is somewhat different: instead of setting in stone and institutionalizing one particular algorithm, we are simply going to let the market decide.

The “market” is defined as follows:

- Chains want to be secure, and want to save on resources. Chains need to select one or more Schelling-vote contracts (or other mechanisms potentially) to serve as sources of security (demand)

- Schelling-vote contracts serve as sources of security (supply). Schelling-vote contracts differ on how much they need to be subsidized in order to secure a given level of participation (price) and how difficult it is for an attacker to bribe or take over the schelling-vote to force it to deliver an incorrect result (quality).

Hence, the cryptoeconomy will naturally gravitate toward schelling-vote contracts that provide better security at a lower price, and the users of those contracts will benefit from being afforded more voting opportunities. However, simply saying that an incentive exists is not enough; a rather large incentive exists to cure aging and we’re still pretty far from that. We also need to show that scalability is actually possible.

The better of the two algorithms described in the post on hypercubes, jury selection, is simple. For every block, a random 200 nodes are selected to vote on it. The set of 200 is almost as secure as the entire set of voters, since the specific 200 are not picked ahead of time and an attacker would need to control over 40% of the participants in order to have any significant chance of getting 50% of any set of 200. If we are separating voting on data availability from voting on validity, then these 200 can be chosen from the set of all participants in a single abstract Schelling-voting contract on the chain, since it’s possible to vote on the data availability of a block without actually understanding anything about the blockchain’s rules. Thus, instead of every node in the network validating the block, only 200 validate the data, and then only a few nodes need to look for actual errors, since if even one node finds an error it will be able to construct a proof and warn everyone else.
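The security claim for a 200-member jury can be checked numerically. The sketch below assumes each juror is independently controlled by the attacker with probability equal to the attacker's share (a binomial model, which approximates sampling from a large voter pool); `takeover_probability` is a hypothetical helper.

```python
from math import comb


def takeover_probability(attacker_share, jury_size=200):
    """Probability that a random jury has a strict attacker majority."""
    need = jury_size // 2 + 1
    return sum(
        comb(jury_size, k)
        * attacker_share ** k
        * (1 - attacker_share) ** (jury_size - k)
        for k in range(need, jury_size + 1)
    )


for share in (0.30, 0.40, 0.45, 0.50):
    print(f"attacker share {share:.0%}: {takeover_probability(share):.2e}")
```

Under this model the chance of capturing any single jury stays well below one percent at a 40% share and rises steeply as the share approaches one half, which is consistent with the threshold quoted above.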

Conclusion

So, what is the end result of all this? Essentially, we have thousands of chains, some with one application, but also with general-purpose chains like Ethereum because some applications benefit from the extremely tight interoperability that being inside a single virtual machine offers. Each chain would outsource the key part of consensus to one or more voting mechanisms on other chains, and these mechanisms would be organized in different ways to make sure they’re as incorruptible as possible. Because security can be taken from all chains, a large portion of the stake in the entire cryptoeconomy would be used to protect every chain.

It may prove necessary to sacrifice security to some extent; if an attacker has 26% of the stake then the attacker can do a 51% takeover of 51% of the subcontracted voting mechanisms or Schelling-pools out there; however, 26% of stake is still a large security margin to have in a hypothetical multi-trillion-dollar cryptoeconomy, and so the tradeoff may be worth it.
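The 26% figure follows from compounding two simple majorities, assuming stake is spread evenly across the voting pools and a pool counts as captured once the attacker holds 51% of it:

$$0.51 \times 0.51 = 0.2601 \approx 26\%$$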

The true benefit of this kind of scheme is just how little needs to be standardized. Each chain, upon creation, can choose some number of Schelling-voting pools to trust and subsidize for security, and via a customized contract it can adjust to any interface. Merkle trees will need to be compatible with all of the different voting pools, but the only thing that needs to be standardized there is the hash algorithm. Different chains can use different currencies, using stable-coins to provide a reasonably consistent cross-chain unit of value (and, of course, these stable-coins can themselves interact with other chains that implement various kinds of endogenous and exogenous estimators). Ultimately, the vision is one of thousands of chains, with the different chains “buying services” from each other. Services might include data availability checking, timestamping, general information provision (eg. price feeds, estimators), private data storage (potentially even consensus on private data via secret sharing), and much more. The ultimate distributed crypto-economy.

The post Scalability, Part 3: On Metacoin History and Multichain appeared first on ethereum blog.

Special thanks to Robert Sams for the development of Seignorage Shares and insights regarding how to correctly value volatile coins in multi-currency systems

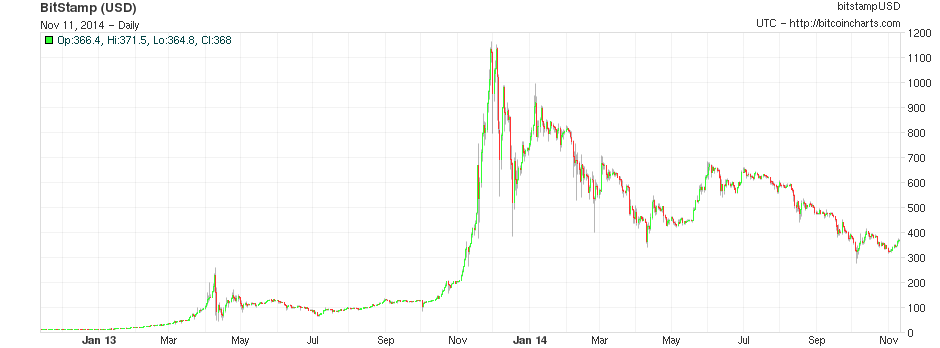

One of the main problems with Bitcoin for ordinary users is that, while the network may be a great way of sending payments, with lower transaction costs, much more expansive global reach, and a very high level of censorship resistance, Bitcoin the currency is a very volatile means of storing value. Although the currency has by and large grown by leaps and bounds over the past six years, in financial markets especially past performance is no guarantee (and by the efficient market hypothesis not even an indicator) of future results in expected value, and the currency also has an established reputation for extreme volatility; over the past eleven months, Bitcoin holders have lost about 67% of their wealth and quite often the price moves up or down by as much as 25% in a single week. Seeing this concern, there is a growing interest in a simple question: can we get the best of both worlds? Can we have the full decentralization that a cryptographic payment network offers, but at the same time have a higher level of price stability, without such extreme upward and downward swings?

Last week, a team of Japanese researchers made a proposal for an “improved Bitcoin”, which was an attempt to do just that: whereas Bitcoin has a fixed supply, and a volatile price, the researchers’ Improved Bitcoin would vary its supply in an attempt to mitigate the shocks in price. However, the problem of making a price-stable cryptocurrency, as the researchers realized, is much different from that of simply setting up an inflation target for a central bank. The underlying question is more difficult: how do we target a fixed price in a way that is both decentralized and robust against attack?

To resolve the issue properly, it is best to break it down into two mostly separate sub-problems:

- How do we measure a currency’s price in a decentralized way?

- Given a desired supply adjustment to target the price, to whom do we issue and how do we absorb currency units?

Decentralized Measurement

For the decentralized measurement problem, there are two known major classes of solutions: exogenous solutions, mechanisms which try to measure the price with respect to some precise index from the outside, and endogenous solutions, mechanisms which try to use internal variables of the network to measure price. As far as exogenous solutions go, so far the only reliable known class of mechanisms for (possibly) cryptoeconomically securely determining the value of an exogenous variable are the different variants of Schellingcoin – essentially, have everyone vote on what the result is (using some set chosen randomly based on mining power or stake in some currency to prevent sybil attacks), and reward everyone that provides a result that is close to the majority consensus. If you assume that everyone else will provide accurate information, then it is in your interest to provide accurate information in order to be closer to the consensus – a self-reinforcing mechanism much like cryptocurrency consensus itself.
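One round of such a vote might look like the sketch below. Using the median as the "majority consensus", a 1% relative tolerance, and a flat reward are all assumptions made for illustration; the text does not fix any of them, and `schelling_round` is a hypothetical name.

```python
from statistics import median


def schelling_round(votes, tolerance=0.01, reward=1.0):
    """Return (consensus value, {voter: payout}) for one voting round.

    votes: {voter: reported value}. Voters whose report lies within
    `tolerance` (relative) of the median are rewarded; others get nothing.
    """
    consensus = median(votes.values())
    payouts = {
        voter: reward if abs(value - consensus) <= tolerance * abs(consensus) else 0.0
        for voter, value in votes.items()
    }
    return consensus, payouts


votes = {"a": 350.0, "b": 351.0, "c": 349.5, "d": 500.0}
consensus, payouts = schelling_round(votes)
```

Here the outlier `d` moves the median only slightly and forfeits the reward, which is the self-reinforcing incentive the mechanism relies on as long as most participants report honestly.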

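The weak point of this reasoning is that it works just as well for a false answer: if participants can coordinate on an inaccurate value, then reporting that value becomes the way to stay close to the consensus. There are three major factors that can influence the extent of this vulnerability: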

1. Is it likely that the participants in a schellingcoin actually have a common incentive to bias the result in some direction?

2. Do the participants have some common stake in the system that would be devalued if the system were to be dishonest?

3. Is it possible to “credibly commit” to a particular answer (ie. commit to providing the answer in a way that obviously can’t be changed)?

(1) is rather problematic for single-currency systems, as if the set of participants is chosen by their stake in the currency then they have a strong incentive to pretend the currency price is lower so that the compensation mechanism will push it up, and if the set of participants is chosen by mining power then they have a strong incentive to pretend the currency’s price is too high so as to increase the issuance. Now, if there are two kinds of mining, one of which is used to select Schellingcoin participants and the other to receive a variable reward, then this objection no longer applies, and multi-currency systems can also get around the problem. (2) is true if the participant selection is based on either stake (ideally, long-term bonded stake) or ASIC mining, but false for CPU mining. However, we should not simply count on this incentive to outweigh (1).

(3) is perhaps the hardest; it depends on the precise technical implementation of the Schellingcoin. A simple implementation involving simply submitting the values to the blockchain is problematic because simply submitting one’s value early is a credible commitment. The original SchellingCoin used a mechanism of having everyone submit a hash of the value in the first round, and the actual value in the second round, sort of a cryptographic equivalent to requiring everyone to put down a card face down first, and then flip it at the same time; however, this too allows credible commitment by revealing (even if not submitting) one’s value early, as the value can be checked against the hash.
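The two-round hash scheme, and the reason it still permits credible commitment, can be sketched as follows. The exact hash construction of the original SchellingCoin is not given here, so the use of SHA-256 over a random salt plus the value, and the helper names `commit` and `verify`, are assumptions for illustration only.

```python
import hashlib
import secrets

def commit(value: str, salt: bytes) -> str:
    """Round 1: publish only the hash of (salt + value)."""
    return hashlib.sha256(salt + value.encode()).hexdigest()

def verify(commitment: str, value: str, salt: bytes) -> bool:
    """Round 2 (or anyone, at any time): check a revealed value against the hash."""
    return commit(value, salt) == commitment

# A participant commits to a price in the first round.
salt = secrets.token_bytes(16)
commitment = commit("250.50", salt)

# If the participant shows (value, salt) to others before the reveal round,
# they can run the same check and know the vote can no longer be changed.
print(verify(commitment, "250.50", salt))   # the honest reveal matches
print(verify(commitment, "400.00", salt))   # any other value does not
```

Because `verify` needs nothing but the public commitment, showing the value and salt to others off-chain is enough to prove how one will vote, which is exactly the early-reveal problem described above.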

A third option is requiring all of the participants to submit their values directly, but only during a specific block; if a participant does release a submission early they can always “double-spend” it. The 12-second block time would mean that there is almost no time for coordination. The creator of the block can be strongly incentivized (or even, if the Schellingcoin is an independent blockchain, required) to include all participations, to discourage or prevent the block maker from picking and choosing answers. A fourth class of options involves some secret sharing or secure multiparty computation mechanism, using a collection of nodes, themselves selected by stake (perhaps even the participants themselves), as a sort of decentralized substitute for a centralized server solution, with all the privacy that such an approach entails.

Finally, a fifth strategy is to do the schellingcoin “blockchain-style”: every period, some random stakeholder is selected, and told to provide their vote as a [id, value] pair, where value is the actual value and id is an identifier of the previous vote that looks correct. The incentive to vote correctly is that only votes that remain in the main chain after some number of blocks are rewarded, and future voters will not attach their vote to a vote that is incorrect, fearing that if they do, voters after them will reject their vote.

Schellingcoin is an untested experiment, and so there is legitimate reason to be skeptical that it will work; however, if we want anything close to a perfect price measurement scheme it’s currently the only mechanism that we have. If Schellingcoin proves unworkable, then we will have to make do with the other kinds of strategies: the endogenous ones.

Endogenous Solutions

To measure the price of a currency endogenously, what we essentially need is to find some service inside the network that is known to have a roughly stable real-value price, and measure the price of that service inside the network as measured in the network’s own token. Examples of such services include:

- Computation (measured via mining difficulty)

- Transaction fees

- Data storage

- Bandwidth provision

A slightly different, but related, strategy is to measure some statistic that correlates indirectly with price, usually a metric of the level of usage; one example of this is transaction volume.

The problem with all of these services is, however, that none of them are very robust against rapid changes due to technological innovation. Moore’s Law has so far guaranteed that most forms of computational services become cheaper at a rate of 2x every two years, and it could easily speed up to 2x every 18 months or slow down to 2x every five years. Hence, trying to peg a currency to any of those variables will likely lead to a system which is hyperinflationary, and so we need some more advanced strategies for using these variables to determine a more stable metric of the price.
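As a rough illustration of the size of the effect, the halving periods mentioned above can be converted into a per-day decline. This is a back-of-the-envelope sketch: the function name `daily_decline` and the day counts used for each period are assumptions, and the model simply assumes a smooth exponential decline.

```python
def daily_decline(halving_days: float) -> float:
    """Fraction of real value lost per day if cost halves every `halving_days` days."""
    return 1 - 2 ** (-1 / halving_days)

# Approximate day counts for the three scenarios in the text.
scenarios = {"18 months": 548, "2 years": 730, "5 years": 1826}
for label, days in scenarios.items():
    print(f"2x every {label}: {daily_decline(days):.4%} per day")
```

A unit pegged naively to the cost of computation would lose real value at whichever of these rates actually holds, and the peg has no way of knowing in advance which one that will be.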

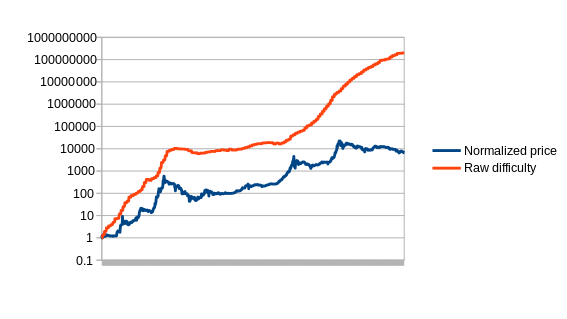

First, let us set up the problem. Formally, we define an estimator to be a function which receives a data feed of some input variable (eg. mining difficulty, transaction cost in currency units, etc) D[1], D[2], D[3]…, and needs to output a stream of estimates of the currency’s price, P[1], P[2], P[3]… The estimator obviously cannot look into the future; P[i] can be dependent on D[1], D[2] … D[i], but not D[i+1]. Now, to start off, let us graph the simplest possible estimator on Bitcoin, which we’ll call the naive estimator: difficulty equals price.
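The definition can be sketched in code as follows. The helper names `run_estimator` and `naive_estimator` are hypothetical, and the naive estimator is taken literally as "difficulty equals price" with no unit scaling. The runner only ever hands the estimator the prefix D[1..i], which enforces the no-lookahead rule.

```python
from typing import Callable, List, Sequence

# An estimator maps the history D[1..i] to a single estimate P[i].
Estimator = Callable[[Sequence[float]], float]

def run_estimator(estimator: Estimator, feed: Sequence[float]) -> List[float]:
    """Produce P[1..n], passing only D[1..i] when computing P[i]."""
    return [estimator(feed[: i + 1]) for i in range(len(feed))]

def naive_estimator(history: Sequence[float]) -> float:
    """Naive estimator: the latest difficulty is the price estimate."""
    return history[-1]

difficulty_feed = [1.0, 2.0, 4.0, 8.0]
print(run_estimator(naive_estimator, difficulty_feed))
```

Any more elaborate estimator can be dropped into the same runner, as long as it is a function of the history alone.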

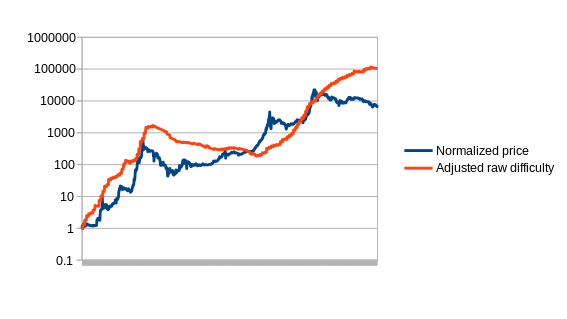

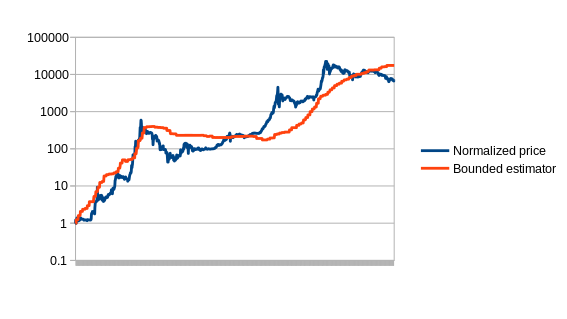

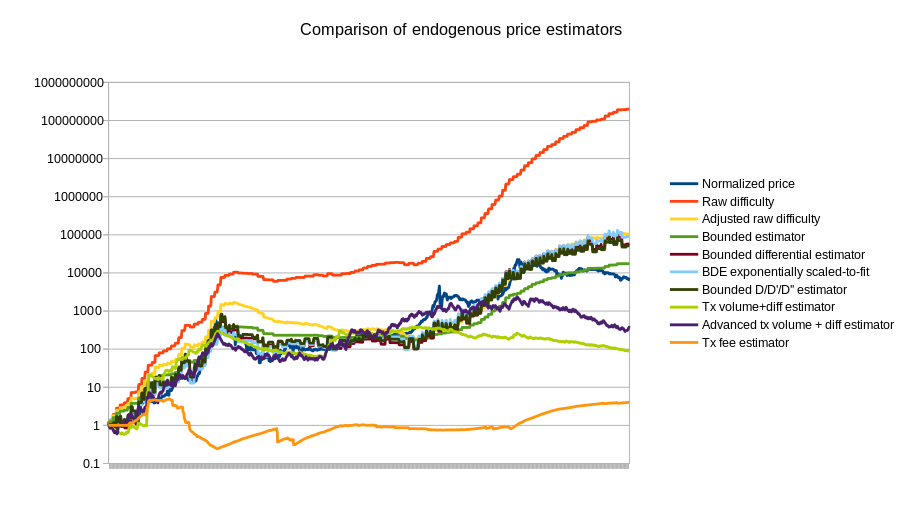

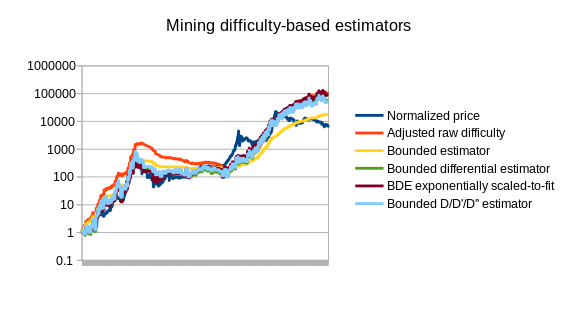

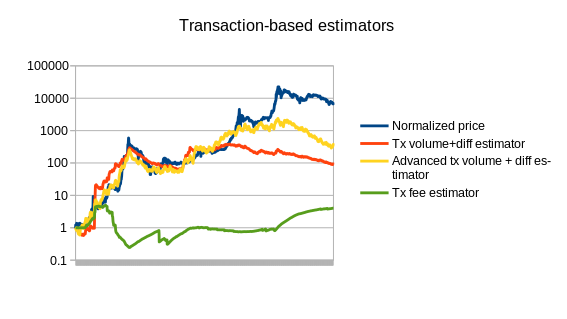

The way that we will select the parameter for our version is by using a variant of simulated annealing to find the optimal values, using the first 780 days of the Bitcoin price as “training data”. The estimators are then left to perform as they would for the remaining 780 days, to see how they would react to conditions that were unknown when the parameters were optimized (this technique, known as “cross-validation”, is standard in machine learning and optimization theory). The optimal value for the compensated estimator is a drop of 0.48% per day, leading to this chart:
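The text here does not spell out how the compensated estimator is defined, so the following is only a sketch under one assumption: that it discounts the raw difficulty by a constant percentage per day. The function names are hypothetical; the 0.48% figure and the 780-day training window come from the paragraph above.

```python
import math

DAILY_DROP = 0.0048  # 0.48% per day, the fitted value quoted in the text

def compensated_estimate(difficulty: float, day: int,
                         daily_drop: float = DAILY_DROP) -> float:
    """Assumed form: difficulty discounted by a constant factor per day."""
    return difficulty * (1 - daily_drop) ** day

def halving_time_days(daily_drop: float = DAILY_DROP) -> float:
    """Number of days after which the discount factor reaches one half."""
    return math.log(0.5) / math.log(1 - daily_drop)

def split_train_test(series, train_days: int = 780):
    """Fit parameters on the first `train_days` points, evaluate on the rest."""
    return series[:train_days], series[train_days:]

train, test = split_train_test(list(range(1560)))
print(len(train), len(test), round(halving_time_days(), 1))
```

Under this assumption, a 0.48% daily drop corresponds to a halving time of roughly 144 days, far faster than the 2x-every-two-years pace usually associated with Moore's law.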

Note that the chart also includes three estimators that use statistics other than Bitcoin mining: a simple and an advanced estimator using transaction volume, and an estimator using the average transaction fee. We can also split up the mining-based estimators from the other estimators:


See https://github.com/ethereum/economic-modeling/tree/master/stability for the source code that produced these results.

Of course, this is only the beginning of endogenous price estimator theory; a more thorough analysis involving dozens of cryptocurrencies will likely go much further. The best estimators may well end up using a combination of different measures; seeing how the difficulty-based estimators overshot the price in 2014 and the transaction-based estimators undershot the price, the two combined could end up being substantially more accurate. The problem is also going to get easier over time as we see the Bitcoin mining economy stabilize toward something closer to an equilibrium where technology improves only as fast as the general Moore’s law rule of 2x every 2 years.

The other issue that all of these estimators have to contend with is exploitability: if transaction volume is used to determine the currency’s price, then an attacker can manipulate the price very easily by simply sending very many transactions. The average transaction fees paid in Bitcoin are about $ 5000 per day; at that price in a stabilized currency the attacker would be able to halve the price. Mining difficulty, however, is much more difficult to exploit simply because the market is so large. If a platform does not want to accept the inefficiencies of wasteful proof of work, an alternative is to build in a market for other resources, such as storage, instead; Filecoin and Permacoin are two efforts that attempt to use a decentralized file storage market as a consensus mechanism, and the same market could easily be dual-purposed to serve as an estimator.

The Issuance Problem

Now, even if we have a reasonably good, or even perfect, estimator for the currency’s price, we still have the second problem: how do we issue or absorb currency units? The simplest approach is to simply issue them as a mining reward, as proposed by the Japanese researchers. However, this has two problems:

- Such a mechanism can only issue new currency units when the price is too high; it cannot absorb currency units when the price is too low.

- If we are using mining difficulty in an endogenous estimator, then the estimator needs to take into account the fact that some of the increases in mining difficulty will be a result of an increased issuance rate triggered by the estimator itself.

If not handled very carefully, the second problem has the potential to create some rather dangerous feedback loops in either direction; however, if we use a different market as an estimator and as an issuance model then this will not be a problem. The first problem seems serious; in fact, one can interpret it as saying that any currency using this model will always be strictly worse than Bitcoin, because Bitcoin will eventually have an issuance rate of zero and a currency using this mechanism will have an issuance rate always above zero. Hence, the currency will always be more inflationary, and thus less attractive to hold. However, this argument is not quite true; the reason is that when a user purchases units of the stabilized currency then they have more confidence that at the time of purchase the units are not already overvalued and therefore will soon decline. Alternatively, one can note that extremely large swings in price are justified by changing estimations of the probability the currency will become thousands of times more expensive; clipping off this possibility will reduce the upward and downward extent of these swings. For users who care about stability, this risk reduction may well outweigh the increased general long-term supply inflation.

BitAssets

A second approach is the (original implementation of the) “bitassets” strategy used by Bitshares. This approach can be described as follows:

- There exist two currencies, “vol-coins” and “stable-coins”.

- Stable-coins are understood to have a value of $ 1.

- Vol-coins are an actual currency; users can have a zero or positive balance of them. Stable-coins exist only in the form of contracts-for-difference (ie. every negative stable-coin is really a debt to someone else, collateralized by at least 2x the value in vol-coins, and every positive stable-coin is the ownership of that debt).

- If the value of someone’s stable-coin debt exceeds 90% of the value of their vol-coin collateral, the debt is cancelled and the entire vol-coin collateral is transferred to the counterparty (“margin call”)

- Users are free to trade vol-coins and stable-coins with each other.
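The margin-call rule in the list above can be sketched as a simple check. The function name and parameters are hypothetical, a stable-coin is valued at exactly $1 as the rules state, and the vol-coin price is assumed to be known, which is precisely the part the measurement section is about.

```python
def margin_called(debt_stable: float, collateral_vol: float,
                  vol_price_usd: float, threshold: float = 0.90) -> bool:
    """True if the stable-coin debt exceeds 90% of the collateral's value."""
    debt_value = debt_stable * 1.0                 # stable-coins are understood to be $1
    collateral_value = collateral_vol * vol_price_usd
    return debt_value > threshold * collateral_value

# A debt of 25 stable-coins opened with 10 vol-coins at $5 (2x collateral).
print(margin_called(25, 10, 5.0))   # still well collateralized
print(margin_called(25, 10, 2.7))   # collateral is now worth $27, 90% of which is below $25
```

With these numbers the position survives until the vol-coin price falls below about $2.78, at which point the whole collateral moves to the counterparty.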

And that’s it. The key piece that makes the mechanism (supposedly) work is the concept of a “market peg”: because everyone understands that stable-coins are supposed to be worth $ 1, if the value of a stable-coin drops below $ 1, then everyone will realize that it will eventually go back to $ 1, and so people will buy it, so it actually will go back to $ 1 – a self-fulfilling prophecy argument. And for a similar reason, if the price goes above $ 1, it will go back down. Because stable-coins are a zero-total-supply currency (ie. each positive unit is matched by a corresponding negative unit), the mechanism is not intrinsically unworkable; a price of $ 1 could be stable with ten users or ten billion users (remember, fridges are users too!).

However, the mechanism has some rather serious fragility properties. Sure, if the price of a stable-coin goes to $ 0.95, and it’s a small drop that can easily be corrected, then the mechanism will come into play, and the price will quickly go back to $ 1. However, if the price suddenly drops to $ 0.90, or lower, then users may interpret the drop as a sign that the peg is actually breaking, and will start scrambling to get out while they can – thus making the price fall even further. At the end, the stable-coin could easily end up being worth nothing at all. In the real world, markets do often show positive feedback loops, and it is quite likely that the only reason the system has not fallen apart already is because everyone knows that there exists a large centralized organization (BitShares Inc) which is willing to act as a buyer of last resort to maintain the “market” peg if necessary.

Note that BitShares has now moved to a somewhat different model involving price feeds provided by the delegates (participants in the consensus algorithm) of the system; hence the fragility risks are likely substantially lower now.

SchellingDollar

An approach vaguely similar to BitAssets that arguably works much better is the SchellingDollar (called that way because it was originally intended to work with the SchellingCoin price detection mechanism, but it can also be used with endogenous estimators), defined as follows:

1. There exist two currencies, “vol-coins” and “stable-coins”. Vol-coins are initially distributed somehow (eg. pre-sale), but initially no stable-coins exist.

2. Users may have only a zero or positive balance of vol-coins. Users may have a negative balance of stable-coins, but can only acquire or increase their negative balance of stable-coins if they have a quantity of vol-coins equal in value to twice their new stable-coin balance (eg. if a stable-coin is $ 1 and a vol-coin is $ 5, then if a user has 10 vol-coins ($ 50) they can at most reduce their stable-coin balance to -25).

3. If the value of a user’s negative stable-coins exceeds 90% of the value of the user’s vol-coins, then the user’s stable-coin and vol-coin balances are both reduced to zero (“margin call”). This prevents situations where accounts exist with negative-valued balances and the system goes bankrupt as users run away from their debt.

4. Users can convert their stable-coins into vol-coins or their vol-coins into stable-coins at a rate of $ 1 worth of vol-coin per stable-coin, perhaps with a 0.1% exchange fee. This mechanism is of course subject to the limits described in (2).

5. The system keeps track of the total quantity of stable-coins in circulation. If the quantity exceeds zero, the system imposes a negative interest rate to make positive stable-coin holdings less attractive and negative holdings more attractive. If the quantity is less than zero, the system similarly imposes a positive interest rate. Interest rates can be adjusted via something like a PID controller, or even a simple “increase or decrease by 0.2% every day based on whether the quantity is positive or negative” rule.
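Two of these rules lend themselves to a short sketch: the collateral limit on negative stable-coin balances and the simple daily interest-rate rule. The function names are hypothetical, and the interpretation that the rate moves by 0.2 percentage points per day is an assumption; the PID-controller variant is not shown.

```python
def min_stable_balance(vol_balance: float, vol_price_usd: float) -> float:
    """Most negative stable-coin balance allowed: collateral must be worth 2x the debt."""
    return -(vol_balance * vol_price_usd) / 2

def step_interest_rate(rate: float, total_stable_supply: float,
                       step: float = 0.002) -> float:
    """Simple daily rule: lower the rate if supply is positive, raise it if negative."""
    if total_stable_supply > 0:
        return rate - step
    if total_stable_supply < 0:
        return rate + step
    return rate

# The worked example from the rules: 10 vol-coins at $5 allow a balance of -25.
print(min_stable_balance(10, 5.0))

# Three days of positive net supply push the rate steadily more negative.
rate = 0.0
for _ in range(3):
    rate = step_interest_rate(rate, total_stable_supply=1000)
print(round(rate, 4))
```

The point of the interest rule is that the system does not need to know why supply is off balance; it only needs the sign, and it keeps pushing the rate until holders respond.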

Here, we do not simply assume that the market will keep the price at $ 1; instead, we use a central-bank-style interest rate targeting mechanism to artificially discourage holding stable-coin units if the supply is too high (ie. greater than zero), and encourage holding stable-coin units if the supply is too low (ie. less than zero). Note that there are still fragility risks here. First, if the vol-coin price falls by more than 50% very quickly, then many margin call conditions will be triggered, drastically shifting the stable-coin supply to the positive side, and thus forcing a high negative interest rate on stable-coins. Second, if the vol-coin market is too thin, then it will be easily manipulable, allowing attackers to trigger margin call cascades.

Another concern is, why would vol-coins be valuable? Scarcity alone will not provide much value, since vol-coins are inferior to stable-coins for transactional purposes. We can see the answer by modeling the system as a sort of decentralized corporation, where “making profits” is equivalent to absorbing vol-coins and “taking losses” is equivalent to issuing vol-coins. The system’s profit and loss scenarios are as follows:

- Profit: transaction fees from exchanging stable-coins for vol-coins

- Profit: the extra 10% in margin call situations

- Loss: situations where the vol-coin price falls while the total stable-coin supply is positive, or rises while the total stable-coin supply is negative (the first case is more likely to happen, due to margin-call situations)

- Profit: situations where the vol-coin price rises while the total stable-coin supply is positive, or falls while it’s negative

Note that the second profit is in some ways a phantom profit; when users hold vol-coins, they will need to take into account the risk that they will be on the receiving end of this extra 10% seizure, which cancels out the benefit to the system from the profit existing. However, one might argue that because of the Dunning-Kruger effect users might underestimate their susceptibility to eating the loss, and thus the compensation will be less than 100%.

Now, consider a strategy where a user tries to hold on to a constant percentage of all vol-coins. When x% of vol-coins are absorbed, the user sells off x% of their vol-coins and takes a profit, and when new vol-coins equal to x% of the existing supply are released, the user increases their holdings by the same portion, taking a loss. Thus, the user’s net profit is proportional to the total profit of the system.

Seignorage Shares

A fourth model is “seignorage shares”, courtesy of Robert Sams. Seignorage shares is a rather elegant scheme that, in my own simplified take on the scheme, works as follows:

- There exist two currencies, “vol-coins” and “stable-coins” (Sams uses “shares” and “coins”, respectively)

- Anyone can purchase vol-coins for stable-coins or stable-coins for vol-coins from the system at a rate of $ 1 worth of vol-coin per stable-coin, perhaps with a 0.1% exchange fee
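The always-available fixed-price conversion can be sketched as two functions. The names are hypothetical, the vol-coin price is assumed to come from some estimator, and charging the 0.1% fee on the output side of each trade is an assumption, since the rule does not say where the fee is taken.

```python
FEE = 0.001  # the 0.1% exchange fee suggested in the rule

def stable_to_vol(stable_amount: float, vol_price_usd: float,
                  fee: float = FEE) -> float:
    """Absorb stable-coins, issue $1 worth of vol-coin per stable-coin, less the fee."""
    usd_value = stable_amount * 1.0
    return usd_value * (1 - fee) / vol_price_usd

def vol_to_stable(vol_amount: float, vol_price_usd: float,
                  fee: float = FEE) -> float:
    """Absorb vol-coins, issue one stable-coin per $1 of vol-coin value, less the fee."""
    return vol_amount * vol_price_usd * (1 - fee)

vol = stable_to_vol(100, vol_price_usd=5.0)
back = vol_to_stable(vol, vol_price_usd=5.0)
print(vol, back)
```

A round trip at an unchanged price costs the user about 0.2%, which is the system's fee income; everything else the system gains or loses comes from net issuance in one direction or the other.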

Note that in Sams’ version, an auction was used to sell off newly-created stable-coins if the price goes too high, and buy if it goes too low; this mechanism basically has the same effect, except using an always-available fixed price in place of an auction. However, the simplicity comes at the cost of some degree of fragility. To see why, let us make a similar valuation analysis for vol-coins. The profit and loss scenarios are simple:

- Profit: absorbing vol-coins to issue new stable-coins

- Loss: issuing vol-coins to absorb stable-coins

The same valuation strategy applies as in the other case, so we can see that the value of the vol-coins is proportional to the expected total future increase in the supply of stable-coins, adjusted by some discounting factor. Thus, here lies the problem: if the system is understood by all parties to be “winding down” (eg. users are abandoning it for a superior competitor), and thus the total stable-coin supply is expected to go down and never come back up, then the value of the vol-coins drops below zero, so vol-coins hyperinflate, and then stable-coins hyperinflate. In exchange for this fragility risk, however, vol-coins can achieve a much higher valuation, so the scheme is much more attractive to cryptoplatform developers looking to earn revenue via a token sale.

Note that both the SchellingDollar and seignorage shares, if they are on an independent network, also need to take into account transaction fees and consensus costs. Fortunately, with proof of stake, it should be possible to make consensus cheaper than transaction fees, in which case the difference can be added to profits. This potentially allows for a larger market cap for the SchellingDollar’s vol-coin, and allows the market cap of seignorage shares’ vol-coins to remain above zero even in the event of a substantial, albeit not total, permanent decrease in stable-coin volume. Ultimately, however, some degree of fragility is inevitable: at the very least, if interest in a system drops to near-zero, then the system can be double-spent and estimators and Schellingcoins exploited to death. Even sidechains, as a scheme for preserving one currency across multiple networks, are susceptible to this problem. The question is simply (1) how do we minimize the risks, and (2) given that risks exist, how do we present the system to users so that they do not become overly dependent on something that could break?

Conclusions

Are stable-value assets necessary? Given the high level of interest in “blockchain technology” coupled with disinterest in “Bitcoin the currency” that we see among so many in the mainstream world, perhaps the time is ripe for stable-currency or multi-currency systems to take over. There would then be multiple separate classes of cryptoassets: stable assets for trading, speculative assets for investment, and Bitcoin itself may well serve as a unique Schelling point for a universal fallback asset, similar to the current and historical functioning of gold.

If that were to happen, and particularly if the stronger version of price stability based on Schellingcoin strategies could take off, the cryptocurrency landscape may end up in an interesting situation: there may be thousands of cryptocurrencies, of which many would be volatile, but many others would be stable-coins, all adjusting prices nearly in lockstep with each other; hence, the situation could even end up being expressed in interfaces as a single super-currency, but where different blockchains randomly give positive or negative interest rates, much like Ferdinando Ametrano’s “Hayek Money”. The true cryptoeconomy of the future may not even have begun to take shape.

The post The Search for a Stable Cryptocurrency appeared first on ethereum blog.

Hi, I’m Stephan Tual, and I’ve been responsible for Ethereum’s adoption and education since January as CCO. I’m also leading our UK ÐΞV hub, located at Co-Work in Putney (South West London).

I feel really privileged to be able to lead the effort on the communication strategy at ÐΞV. For the very first time, we’re seeing the mainstream public take a genuine interest in the potential of decentralisation. The feeling of excitement about what ‘could be’ when I first read Vitalik’s whitepaper on that fateful Christmas afternoon is now shared by tens of thousands of technologists, developers and entrepreneurs.

Thanks to the Ether sale, a group of smart, hardworking individuals is now able to work full time on solving core technical and adoption challenges, and to deliver a solution at 10x the speed an equivalent garage-based initiative would have taken. With Ethereum’s APIs supporting Gav’s vision for web 3, it is finally within the reach of the community to build decentralized applications without middlemen. By democratizing access to programmable blockchain technology, Ethereum empowers software developers and entrepreneurs to make a major impact on not only the decentralisation of the economy, but also social structures, voting mechanisms and so much more. It’s a very ambitious project, and everyone at ÐΞV feels a strong sense of duty to deliver on this vision.

As part of our efforts, technology is – of course – key, but so is adoption. Ethereum without dapps (decentralized apps) would be akin to a video game console without launch titles, and, just like any protocol, we expect the applications to be the real stars of the show. Here’s how we plan to spread the word and support developers in their efforts.

Education

Building a curriculum: We’re building an extensive curriculum adapted to both teachers and self-learners at home, at hackathons and in universities around the globe. It will consist of well-defined modules that progress in complexity over time; our goal is to establish a learning standard that will of course be completely free of charge and 100% Open Source.

Content aggregation: at the moment we are aware that information on how to ‘get into’ Ethereum is a little bit fragmented between forums, multiple wikis and various 3rd party sites. A subdomain to our website will be created during the course of the next few months to access this valuable information easily and in one place.

Produce tutorials, videos and articles: tutorials are key to learning a new set of languages and tools. By producing both videos and text-based tutorials, we intend to give the community an insider’s view on best practices, from structuring contract storage to leveraging the new whisper P2P messaging system, for example.

CodeAcademy-like site: not everyone likes to learn within a classroom environment, and some feel constrained by linear tutorials. With a release date coinciding with the launch of Ethereum, we’re partnering with a US-based company to build a CodeAcademy-like site within a gamified environment, where you’ll be able to learn at your own pace how to build dapps and their backend contracts.

University chapters: Vitalik and I recently gave a presentation at Cambridge University and Ethereum will participate in the Hackathon on Transparency on November 26 at the University of Geneva. Encouraged by the enthusiasm we’ve witnessed in the academic world, we are working to support directly the Oxbridge Blocktech Network (OBN) in their efforts to build a network of chapters, firstly within the UK then throughout Europe.

I’m happy to announce that Ken Kappler has joined the UK team to help with these educational efforts. Many of you in London know Ken as he’s been a semi-permanent fixture at all our meetups and hackathons, kindly helping behind the scenes. Ken, known as BlueChain on IRC, is also the writer behind http://dappsforbeginners.wordpress.com/ which will soon merge with our own education site.

Ken will lead a weekly ‘Ethereum Clinic’ on IRC to answer any questions you might have with your current project. Times will be posted on our forums.

Meetups

Encouraging the creation of new meetups: we now have an extensive network of 85 meetups worldwide, which is an amazing achievement but not sufficient to handle the overwhelming demand for regular catchups in a format that’s appropriate for the local needs and culture. We intend to encourage the creation of new meetups in almost every country and major urban hubs.

Tooling and support: in order to drive the effort to create and maintain such a large network of international meetups, we will be providing tools for meetup leaders to interact with each other, gain access to the core dev team for video-conference or physical interventions, and exchange information about speakers. These tools will of course be free to use and access.

Collaterals and venues: for the meetups that are the most active, Ethereum is considering, where appropriate, the use of small bursaries so that meetup leaders in these ‘core locales’ do not have to contend with the full costs of collaterals and venues. We will also work with our partners to help meetups secure sponsorships and access to free locations to hold their mini-conferences.

Global Hackathon: Starting this week, the Ethereum workshops are going to slowly transform into proper hackathons. We are working with wonderful locations around the globe, the vast majority of which started off as Ethereum meetups, to organize a worldwide hackathon with some great ETH prizes for the best dapps.

We’re very lucky to welcome Anthony D’onofrio to drive these very important initiatives. Anthony starts on the 10th of this month and will also cover the North American region from a community perspective – you probably already know him as ‘Texture’, his handle on most forums and channels.

Community

I’m incredibly proud that Ethereum’s exposure in the community has been entirely organic since day one. This has been the result of major, time consuming efforts to identify Ethereum projects in the wild, reaching out directly and building a strong relationship with our user base. We have achieved several key milestones, including over 10,000 followers on Twitter, 100,000 page views per month on our website, similar numbers on our forums and the growth trend only continues to accelerate.

Historically, Ethereum has never used PR as a tool to increase adoption, relying instead on word of mouth, meetups and conferences to spread the word. As the media attention is now intensifying rapidly, I’m pleased to welcome Freya Stevens to the team as PR/Marketing lead. Freya will help us build a shared database of media leads, write articles and make complex technology palatable to the general public while identifying strong story angles. Freya is based out of Cambridge, UK.

Also with a view to scaling up these initiatives, we’re proud to welcome George Hallam to the team, AKA thehighfiveghost, who recently posted a survey so you can let Ethereum know how well we’re doing our job as custodians and developers of the platform. George, as a key supporter of the London community, will already be familiar to many.

As part of these efforts, expect to see a lot more interactions on Reddit, IRC, Discuss and of course our very own forums. George will also help me identify key Ethereum-based projects and make contact to see how we can best help with information, connections and inclusion as guests in our weekly video updates, shot at our Putney Studio.

In order to produce very high quality content, we are also welcoming Ian Meikle to the London Hub. Ian is the creator of most of the video materials you might have seen relating to Ethereum, including the superb video loop that has been a staple at many Ethereum meetups. Ian will leverage the equipment at our studio to create explainer videos, interview key players in the space, and record panels led by Vinay Gupta, who joins the coms team as Strategic Consultant.

In London, above and beyond our existing panels, socials and hackathons, regular ‘show and tell’ sessions are being scheduled for dapp developers to present their work and receive feedback, a model we intend to promote internationally within the month.

And of course, last but not least, expect a major refresh to our website, with beautiful, clean content, practical examples of dapps, a dynamic meetup map and links to all our newly created assets and community points of contact.

In conclusion

The question we’re going to continue asking ourselves every day is how we can support you, the community, in building kick-ass dapps and being successful in your venture on our platform. I hope the above gives you a quick intro to our plans. I’ll be issuing regular updates both on this blog and on our youtube.

The post Ethereum Community and Adoption Update – Week 1 appeared first on ethereum blog.

I thought it was about time I’d give an update on my side of things for those interested in knowing how we’re doing on the Dutch side. My name is Jeff, a founder of Ethereum and one of the three directors (alongside Vitalik and Gavin) of Ethereum ÐΞV, the development entity building Ethereum and all the associated tech.

Over the past months I’ve been looking for a suitable office space to host the Amsterdam Hub. Unfortunately it takes more work than I initially anticipated, and I’ve got nothing to show for it so far. I’ve passed this task on to my good friend and now colleague Maran. Maran will do all he can to find the most suitable place for the Ams hub and the development of Mist. Those of you who are using the Ethereum programming language mutan may want to switch over to Serpent or the soon-to-be de facto programming language Solidity. I’ve made the sensible decision to focus my attention on more pressing matters such as the development of the protocol and the browser. Perhaps in the future I’ll have time to pick up its development again.

ÐΞV Amsterdam

The lawyers have finally, after 2 months, gotten around to setting up the company here in Amsterdam (ugh, the Dutch and their bureaucracy eh) and we’ve found a bank that is willing to accept us as their loyal customers (…). At the moment we have a few options for our office space and I’ll write about them as soon as I know something more concrete.

The Team

It’s about time the Ams team got a proper introduction. These guys do some seriously good work!

The first to join the Ams team was Alex van de Sande (aka avsa). Alex is a gifted UX engineer and he’s been with us for quite a while. It was only a matter of time before he became an official member of the ÐΞV team. Alex has taken on the roles of UI developer and UX expert and is prototyping the latest version of the Web3 browser.

The second to join the team was Viktor Trón. Vik is a crazy math-head and is currently hacking away at the new DEVP2P and testing it rigorously. I’ve known Vik since the start of the project, somewhere around Jan/Feb; he’s a great guy and a real asset to this team.

The third to join the team was Felix Lange. Felix is a die-hard Gopher (yay!) and the first thing he pointed out to me was that I had done a bad job looking after my goroutines and there were a lot of race conditions, so nice of him (-; Felix is going to work on the Whisper implementation once the spec has been formally finalised. Felix is a superstar gopher and has the ability to become a true Ethereum Core Dev.

The fourth to join the team was Daniel Nagy. Daniel has a history in crypto and security and his first task is to create a comprehensive spec for our DHT implementation and then to develop it.

Last but certainly not least is Maran Hidskes. Maran has been on this team before but in a completely different role. Maran used to work on the protocol, but after spawning a new member of his family (a crying, peeing, pooping machine) he decided to take some time off. Now his main role is a daunting one: the administration of ÐΞV Amsterdam.

Even though they are not on anyone’s team, I’d like to thank Nick, Caktux and Joris for their ongoing effort in developing our build systems. I’d also like to thank Nick specifically for pointing out the inconsistencies between our implementations: Nick, you truly are a great pain in my ass (-;

Onwards

While we are marching towards the next instalment in the Proof of Concept series (PoC-7), we still have quite some work ahead of us.

Recently I’ve started to build a toolset so that we can run the awesome test suite written by Christoph (he’s on the Berlin team). Christoph has put a tremendous amount of work into developing a proper test suite for the Ethereum protocol. I never knew people could enjoy writing tests like you do; you’ve got my utmost respect.

I’ve also started a cross-implementation JavaScript framework called ethereum.js. Ethereum.js is quickly gaining adoption from the rest of the Ether Hackers and is already in use by the Go websocket & JSON RPC implementation, C++ JSON RPC implementation and the Node.js implementation. Ethereum.js is a true ÐΞV cross implementation team effort.

Our Polish partners at IMAPP (Paweł and Artur) have completed the first version of their JIT-compiled, LLVM-based EVM implementation and have agreed to create a Go bridge so that Mist may benefit from the speed increase in running Ethereum contracts mentioned earlier in Gav’s update.

Fin

I shall try to keep writing blog posts with updates regarding Mist, the protocol and ÐΞV in general, so stay tuned!

The post Jeff’s Ethereum ÐΞV Update I appeared first on ethereum blog.

Well… what a busy two weeks. I thought it about time to make another update for any of you who might be interested in how we’re doing. If you don’t already know, I’m Gavin, a founder of Ethereum and one of the three directors (alongside Vitalik and Jeffrey) of Ethereum ÐΞV, the development entity building Ethereum and all the associated technology.

After doing some recruitment on behalf of DEV in Bucharest with the help of Mihai Alisie and the lovely Roxanna Sureanu I spent the last week at my home (and coincidentally, the Ethereum HQ) in Zug, Switzerland. During this time I was able to get going on the first prototype of Whisper, our secure identity-based communications protocol, finishing with a small IRC-like ÐApp demonstrating how easy it is to use. For those interested, there is more information on Whisper in the Ethereum Github wiki and a nice little screenshot on my twitter feed. In addition to this I’ve been helping finalise the soon-to-be-announced PoC-7 specification and working towards a PoC-8 (final). Finally, during our brief time together in Zug, Jeffrey, Vitalik and I drafted our strategy concerning identity and key management; this will be developed further during the coming weeks.

ÐΞVHUB Berlin

In Berlin, Sarah has been super-busy with the builders getting the hub ready. Here are a couple of pictures of the work in progress that is the Berlin hub. It might not look like much yet, but we’re on target to be moved in by mid-November. I’m particularly happy with Sarah’s efforts to find a genuine 70s barista espresso machine (-:

I’m excited to announce that Christian Vömel is joining the team in Berlin to be the Office Manager of ÐΞVHUB Berlin. Christian has many years’ experience, including work in an international environment, and has even taught office management! He’ll be taking some of the load from our frankly much-overworked company secretary Aeron Buchanan.

The Team Grows

We’ve finalised a number of new hires over the past couple of weeks. Network engineer Lefteris Karapetsas will be joining the Berlin team imminently. Having considerable experience with state-of-the-art network traffic analysis and deep-packet inspection systems, he’ll be helping audit our network protocols. Like much of our team, though, he is truly multidisciplinary, so he’ll also be working on NatSpec, the code name for our Natural Language Formal Contract Specification system, a cornerstone of our transaction security model.

I’m happy to announce that Ian Meikle, the accomplished videographer who co-authored the impressive “Koyaanis-glitchy” Ethereum brand video has been moved to ÐΞV to help with the communications team. He who shall be known only as Texture has also joined the comms side with Stephan to help with the strategy stateside and coordinate the worldwide meetup and hackathon network. Great to see such a capable and passionate designer on the team; I know he has a good few ideas for ÐApps!

There are two more hires under Stephan in the comms team. Ken Kappler will be handling the developer education direction, hackathons, the ethereum curriculum and university partnerships. George Hallam has been employed to evangelize ethereum to startups and partners, boost the reach of our formal network and generally help Stephan in the quest of having everybody know what Ethereum is and how it can help them.

Jeff’s team has also been expanded recently; he’ll be telling you about his developments in an imminent post.

Further Developments

Aside from the aforementioned progress with Whisper and PoC-7, Christoph has been continuing his great work with the tests repository. Christian has been making great progress with the Solidity language, having placed the first Solidity-compiled program onto the testnet block chain only a few days ago.

Marek has studiously been moving C++ over to a JSON-RPC and Javascript front-end fundamentally unified and bound to the Go client. Alex meanwhile has been grappling with the C++ crypto back-end and has done a great job of reducing bloat and extraneous dependencies.

Of late, the comms team has some good news brewing. In particular, it is in contact with some world-class educational establishments regarding the possibility of education partnerships and the formation of a network of chapters both in the UK and internationally. Watch this space (-:

Finally, our Polish partners at IMAPP (Paweł and Artur) have completed the first version of their JIT-compiled, LLVM-based EVM implementation. They are reporting an average 30x speedup (as high as 100x!) for non-external EVM instructions over the already best-in-class basic C++-based EVM implementation. Brilliant work, and we’re looking forward to more improvements and optimisations yet.

And the rest…

So much to come; there are a couple of announcements (including a slew of imminent hires) I’d love to make but they need to be finalised before I can write about them here. Look out for the next update!

The post Gav’s Ethereum ÐΞV Update II appeared first on ethereum blog.

An important and controversial topic in the area of personal wallet security is the concept of “brainwallets”: storing funds using a private key generated from a password memorized entirely in one’s head. Theoretically, brainwallets have the potential to provide an almost utopian guarantee of security for long-term savings: for as long as they are kept unused, they are not vulnerable to physical theft or hacks of any kind, and there is no way to even prove that you still remember the wallet; they are as safe as your very own human mind. At the same time, however, many have argued against the use of brainwallets, claiming that the human mind is fragile and not well designed for producing, or remembering, long cryptographic secrets, and so brainwallets are too dangerous to work in reality. Which side is right? Is our memory sufficiently robust to protect our private keys, is it too weak, or is perhaps a third and more interesting possibility actually the case: that it all depends on how the brainwallets are produced?

Entropy

If the challenge at hand is to create a brainwallet that is simultaneously memorable and secure, then there are two variables that we need to worry about: how much information we have to remember, and how long the password takes for an attacker to crack. As it turns out, the difficulty lies in the fact that the two variables are very highly correlated; in fact, absent a few specific kinds of special tricks and assuming an attacker running an optimal algorithm, they are precisely equivalent (or rather, one is precisely exponential in the other). However, to start off we can tackle the two sides of the problem separately.

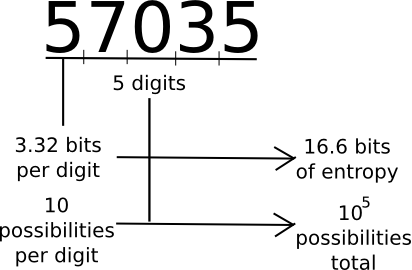

A common measure that computer scientists, cryptographers and mathematicians use to quantify “how much information” a piece of data contains is “entropy”. Loosely speaking, entropy is the base-2 logarithm of the number of possible messages that are of the same “form” as a given message. For example, consider the number 57035. 57035 seems to be in the category of five-digit numbers, of which there are 100000. Hence, the number contains about 16.6 bits of entropy, as $2^{16.6} \approx 100000$. The number 61724671282457125412459172541251277 is 35 digits long, and $\log_2(10^{35}) \approx 116.3$, so it has 116.3 bits of entropy. A random string of ones and zeroes n bits long will contain exactly n bits of entropy. Thus, longer strings have more entropy, and strings that have more symbols to choose from have more entropy.

On the other hand, the number 11111111111111111111111111234567890 has much less than 116.3 bits of entropy. Although it has 35 digits, the number is not in the category of arbitrary 35-digit numbers; it is in the category of 35-digit numbers with a very high level of structure. A complete list of numbers with at least that level of structure might be at most a few billion entries long, giving it perhaps only 30 bits of entropy.
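The arithmetic behind these figures can be checked with a few lines of Python. This is only a minimal sketch, and it assumes every symbol is chosen uniformly and independently; the helper name `entropy_bits` is made up for illustration.

```python
import math


def entropy_bits(num_symbols: int, length: int) -> float:
    """Entropy in bits of a uniformly random string.

    There are num_symbols ** length possible strings, so the entropy is
    log2(num_symbols ** length) = length * log2(num_symbols).
    """
    return length * math.log2(num_symbols)


print(round(entropy_bits(10, 5), 1))   # a five-digit number such as 57035
print(round(entropy_bits(10, 35), 1))  # a 35-digit number
print(entropy_bits(2, 128))            # 128 random bits
```

The first two calls reproduce the 16.6 and 116.3 bits quoted in the text, and the last one shows that n random bits carry exactly n bits of entropy.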

Information theory has a number of more formal definitions that try to grasp this intuitive concept. A particularly popular one is the idea of Kolmogorov complexity; the Kolmogorov complexity of a string is basically the length of the shortest computer program that will print that value. In Python, the above string is also expressible as '1'*26+'234567890' – an 18-character string, while 61724671282457125412459172541251277 takes 37 characters (the actual digits plus quotes). This gives us a more formal understanding of the idea of “category of strings with high structure” – those strings are simply the set of strings that take a small amount of data to express. Note that there are other compression strategies we can use; for example, unbalanced strings like 1112111111112211111111111111111112111 can be cut by at least half by creating special symbols that represent multiple 1s in sequence. Huffman coding is an example of an information-theoretically optimal algorithm for creating such transformations.
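The length comparison can be made concrete with a small Python sketch. It only illustrates the idea: true Kolmogorov complexity is not computable, so the code compares the lengths of two hand-written Python expressions. The run-length encoder is an assumed stand-in for “special symbols that represent multiple 1s in sequence”, not Huffman coding, and all function names are hypothetical.

```python
from itertools import groupby

# A short "program" that produces the highly structured 35-digit number.
short_program = "'1'*26+'234567890'"
structured = eval(short_program)  # evaluates to the 35-digit string

# The random-looking number has no obviously shorter description than
# the literal itself: the digits plus the two quote characters.
random_looking = "61724671282457125412459172541251277"
literal_program = repr(random_looking)


def run_length_encode(s):
    """Collapse each run of a repeated symbol into a (symbol, count) pair."""
    return [(symbol, len(list(run))) for symbol, run in groupby(s)]


def run_length_decode(pairs):
    """Inverse of run_length_encode."""
    return "".join(symbol * count for symbol, count in pairs)


unbalanced = "1112111111112211111111111111111112111"
runs = run_length_encode(unbalanced)

print(len(structured), len(short_program))         # digits vs. program length
print(len(random_looking), len(literal_program))   # digits vs. literal length
print(len(unbalanced), len(runs))                  # symbols vs. number of runs
```

The program lengths come out as the 18 and 37 characters mentioned above, and the unbalanced string collapses into a handful of runs, far fewer than half its original number of symbols.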

Finally, note that entropy is context-dependent. The string “the quick brown fox jumped over the lazy dog” may have over 100 bytes of entropy as a simple Huffman-coded sequence of characters, but because we know English, and because so many thousands of information theory articles and papers have already used that exact phrase, the actual entropy is perhaps around 25 bytes – I might refer to it as “fox dog phrase” and using Google you can figure out what it is.

So what is the point of entropy? Essentially, entropy is how much information you have to memorize. The more entropy a password has, the harder it is to memorize. Thus, at first glance it seems that you want passwords that are as low-entropy as possible, while at the same time being hard to crack. However, as we will see below, this way of thinking is rather dangerous.

Strength

Now, let us get to the next point: password security against attackers. The security of a password is best measured by the expected number of computational steps that it would take for an attacker to guess your password. For randomly generated passwords, the simplest algorithm to use is brute force: try all possible one-character passwords, then all two-character passwords, and so forth. Given an alphabet of n characters and a password of length k, such an algorithm would crack the password in roughly $n^k$ time. Hence, the more kinds of characters you use, the better, and the longer your password is, the better.
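A toy version of this brute-force search can be sketched in Python as follows. It is a minimal illustration rather than a realistic cracker: it assumes the attacker can test guesses directly against the target, and the function names are invented for this example.

```python
import itertools


def brute_force_guesses(alphabet, max_len):
    """Yield every string over `alphabet`, shortest first, up to max_len."""
    for length in range(1, max_len + 1):
        for combo in itertools.product(alphabet, repeat=length):
            yield "".join(combo)


def search_space(n, k):
    """Number of candidates of length 1..k over an n-symbol alphabet.

    The sum is dominated by its last term, n ** k.
    """
    return sum(n ** i for i in range(1, k + 1))


def crack(target, alphabet, max_len):
    """Return how many guesses brute force needs, or None if not found."""
    guesses = brute_force_guesses(alphabet, max_len)
    for attempts, guess in enumerate(guesses, start=1):
        if guess == target:
            return attempts
    return None


print(crack("cab", "abc", 3), "of", search_space(3, 3), "candidates")
print(26 ** 8)  # eight lowercase letters
print(62 ** 8)  # eight letters (either case) or digits
```

Growing either the alphabet size n or the length k enlarges the search space, which is why both help against this kind of attack.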

There is one approach that tries to elegantly combine these two strategies without being too hard to memorize: Steve Gibson’s haystack passwords. As Steve Gibson explains:

Which of the following two passwords is stronger, more secure, and more difficult to crack?