Warning: this post contains crazy ideas. My describing a crazy idea should NOT be construed as implying that (i) I am certain that the idea is correct/viable, (ii) I have even a >50% probability estimate that the idea is correct/viable, or that (iii) “Ethereum” endorses any of this in any way.

One of the common questions that many in the crypto 2.0 space have about the concept of decentralized autonomous organizations is a simple one: what are DAOs good for? What fundamental advantage would an organization gain from having its management and operations tied down to hard code on a public blockchain, that could not be had by going the more traditional route? What advantages do blockchain contracts offer over plain old shareholder agreements? In particular, even if public-good rationales in favor of transparent governance, and guaranteed-not-to-be-evil governance, can be raised, what is the incentive for an individual organization to voluntarily weaken itself by opening up its innermost source code, where its competitors can see every single action that it takes or even plans to take while themselves operating behind closed doors?

There are many paths that one could take to answering this question. For the specific case of non-profit organizations that are already explicitly dedicating themselves to charitable causes, one can rightfully say that the lack of individual incentive is not a problem; they are already dedicating themselves to improving the world for little or no monetary gain to themselves. For private companies, one can make the information-theoretic argument that a governance algorithm will work better if, all else being equal, everyone can participate and introduce their own information and intelligence into the calculation – a rather reasonable hypothesis given the established result from machine learning that much larger performance gains can be made by increasing the data size than by tweaking the algorithm. In this article, however, we will take a different and more specific route.

What is Superrationality?

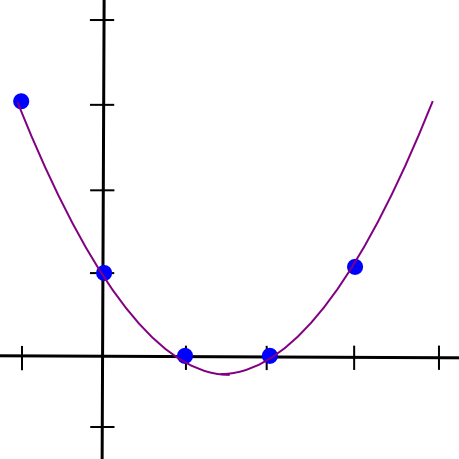

In game theory and economics, it is a very widely understood result that there exist many classes of situations in which a set of individuals have the opportunity to act in one of two ways, either “cooperating” with or “defecting” against each other, such that everyone would be better off if everyone cooperated, but regardless of what others do each individual would be better off by themselves defecting. As a result, the story goes, everyone ends up defecting, and so people’s individual rationality leads to the worst possible collective result. The most common example of this is the celebrated Prisoner’s Dilemma game.

Since many readers have likely already seen the Prisoner’s Dilemma, I will spice things up by giving Eliezer Yudkowsky’s rather deranged version of the game:

However, substance S can only be produced by working with [a strange AI from another dimension whose only goal is to maximize the quantity of paperclips] – substance S can also be used to produce paperclips. The paperclip maximizer only cares about the number of paperclips in its own universe, not in ours, so we can’t offer to produce or threaten to destroy paperclips here. We have never interacted with the paperclip maximizer before, and will never interact with it again.

Both humanity and the paperclip maximizer will get a single chance to seize some additional part of substance S for themselves, just before the dimensional nexus collapses; but the seizure process destroys some of substance S.

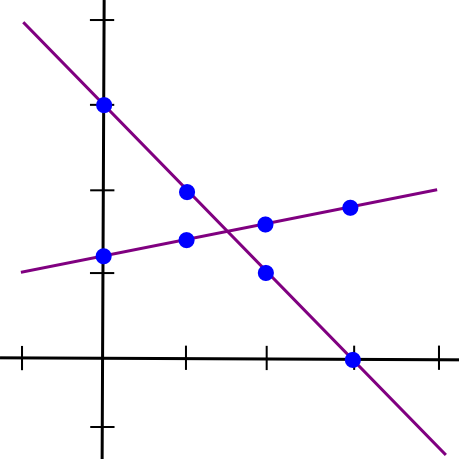

The payoff matrix is as follows:

| | Humans cooperate | Humans defect |
|---|---|---|
| AI cooperates | 2 billion lives saved, 2 paperclips gained | 3 billion lives, 0 paperclips |
| AI defects | 0 lives, 3 paperclips | 1 billion lives, 1 paperclip |

From our point of view, it obviously makes sense from a practical, and in this case moral, standpoint that we should defect; there is no way that a paperclip in another universe can be worth a billion lives. From the AI’s point of view, defecting always leads to one extra paperclip, and its code assigns a value to human life of exactly zero; hence, it will defect. However, the outcome that this leads to is clearly worse for both parties than if the humans and AI both cooperated – but then, if the AI was going to cooperate, we could save even more lives by defecting ourselves, and likewise for the AI if we were to cooperate.
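The dominance argument above can be checked mechanically. The sketch below encodes the payoff table as a Python dictionary keyed by (human move, AI move), with `"C"` for cooperate and `"D"` for defect; all names are illustrative and not taken from any library.

```python
# Yudkowsky's variant, keyed by (human_move, ai_move); "C" = cooperate, "D" = defect.
# Each value is (billions of human lives saved, paperclips gained by the AI).
PAYOFFS = {
    ("C", "C"): (2, 2),
    ("D", "C"): (3, 0),
    ("C", "D"): (0, 3),
    ("D", "D"): (1, 1),
}
MOVES = ("C", "D")


def human_best_response(ai_move: str) -> str:
    # Humans only value lives saved (index 0 of each payoff pair).
    return max(MOVES, key=lambda h: PAYOFFS[(h, ai_move)][0])


def ai_best_response(human_move: str) -> str:
    # The paperclip maximizer only values paperclips (index 1).
    return max(MOVES, key=lambda a: PAYOFFS[(human_move, a)][1])


# Defection is each side's best response no matter what the other side does...
human_dominant = all(human_best_response(a) == "D" for a in MOVES)
ai_dominant = all(ai_best_response(h) == "D" for h in MOVES)

# ...yet the resulting outcome is worse for both than mutual cooperation.
both_worse_off = all(d < c for d, c in zip(PAYOFFS[("D", "D")], PAYOFFS[("C", "C")]))

print(human_dominant, ai_dominant, both_worse_off)  # True True True
```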

In the real world, many two-party prisoner’s dilemmas on the small scale are resolved through the mechanism of trade and the ability of a legal system to enforce contracts and laws; in this case, if there existed a god who had absolute power over both universes but cared only about compliance with one’s prior agreements, the humans and the AI could sign a contract to cooperate and ask the god to simultaneously prevent both from defecting. When there is no ability to pre-contract, laws penalize unilateral defection. However, there are still many situations, particularly when many parties are involved, where opportunities for defection exist:

- Alice is selling lemons in a market, but she knows that her current batch is low quality and once customers try to use them they will immediately have to throw them out. Should she sell them anyway? (Note that this is the sort of marketplace where there are so many sellers you can’t really keep track of reputation.) Expected gain to Alice: $5 revenue per lemon minus $1 shipping/store costs = $4. Expected gain to society: $5 revenue minus $1 costs minus $5 wasted money from customer = -$1. Alice sells the lemons.

- Should Bob donate $1,000 to Bitcoin development? Expected gain to society: $10 * 100,000 people - $1,000 = $999,000. Expected gain to Bob: $10 - $1,000 = -$990, so Bob does not donate.

- Charlie found someone else’s wallet, containing $500. Should he return it? Expected gain to society: $500 (to recipient) - $500 (Charlie’s loss) + $50 (intangible gain to society from everyone being able to worry a little less about the safety of their wallets) = $50. Expected gain to Charlie: -$500, so he keeps the wallet.

- Should David cut costs in his factory by dumping toxic waste into a river? Expected gain to society: $1,000 savings minus $10 average increased medical costs * 100,000 people = -$999,000. Expected gain to David: $1,000 - $10 = $990, so David pollutes.

- Eve developed a cure for a type of cancer which costs $500 per unit to produce. She can sell it for $1,000, allowing 50,000 cancer patients to afford it, or for $10,000, allowing 25,000 cancer patients to afford it. Should she sell at the higher price? Expected gain to society: -25,000 lives (counting Eve’s profit, which cancels out the wealthier buyers’ losses). Expected gain to Eve: $237.5 million profit instead of $25 million = $212.5 million, so Eve charges the higher price.
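The arithmetic in these examples follows one pattern: the action a narrowly selfish individual takes is decided by the sign of their private gain, while society would decide by the sign of the total gain. The sketch below, which simply re-tallies the illustrative figures from the list (names are hypothetical), checks that the two signs disagree in every case and recomputes Eve's profit separately, since her case mixes dollars and lives.

```python
# (action under consideration, gain to the individual, gain to society), in dollars,
# using the illustrative figures from the list above. Society's total includes the
# individual's own gain.
CASES = [
    ("Alice sells the bad lemons", 5 - 1, 5 - 1 - 5),
    ("Bob donates to Bitcoin development", 10 - 1000, 10 * 100_000 - 1000),
    ("Charlie returns the wallet", -500, 500 - 500 + 50),
    ("David dumps toxic waste", 1000 - 10, 1000 - 10 * 100_000),
]


def incentives_misaligned(private_gain: int, social_gain: int) -> bool:
    # A narrowly selfish agent acts iff private_gain > 0; society would prefer the
    # action iff social_gain > 0. Each dilemma is a case where the two disagree.
    return (private_gain > 0) != (social_gain > 0)


for action, private, social in CASES:
    verdict = "does it" if private > 0 else "does not"
    print(f"{action}: private {private:+,}, social {social:+,}; selfish agent {verdict}")


def profit(price: int, unit_cost: int, buyers: int) -> int:
    return (price - unit_cost) * buyers


# Eve's case mixes units (dollars for Eve, lives for society), so only her side is tallied.
eve_high = profit(10_000, 500, 25_000)
eve_low = profit(1_000, 500, 50_000)
eve_gain = eve_high - eve_low
```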

Of course, in many of these cases, people sometimes act morally and cooperate, even though doing so leaves them personally worse off. But why do they do this? We were produced by evolution, which is generally a rather selfish optimizer. There are many possible explanations; the one we will focus on involves the concept of superrationality.

Superrationality

Consider the following explanation of virtue, courtesy of David Friedman:

Suppose I wish people to believe that I have certain characteristics–that I am honest, kind, helpful to my friends. If I really do have those characteristics, projecting them is easy–I merely do and say what seems natural, without paying much attention to how I appear to outside observers. They will observe my words, my actions, my facial expressions, and draw reasonably accurate conclusions.

Suppose, however, that I do not have those characteristics. I am not (for example) honest. I usually act honestly because acting honestly is usually in my interest, but I am always willing to make an exception if I can gain by doing so. I must now, in many actual decisions, do a double calculation. First, I must decide how to act–whether, for example, this is a good opportunity to steal and not be caught. Second, I must decide how I would be thinking and acting, what expressions would be going across my face, whether I would be feeling happy or sad, if I really were the person I am pretending to be.

If you require a computer to do twice as many calculations, it slows down. So does a human. Most of us are not very good liars.

If this argument is correct, it implies that I may be better off in narrowly material terms–have, for instance, a higher income–if I am really honest (and kind and …) than if I am only pretending to be, simply because real virtues are more convincing than pretend ones. It follows that, if I were a narrowly selfish individual, I might, for purely selfish reasons, want to make myself a better person–more virtuous in those ways that others value.

The final stage in the argument is to observe that we can be made better–by ourselves, by our parents, perhaps even by our genes. People can and do try to train themselves into good habits–including the habits of automatically telling the truth, not stealing, and being kind to their friends. With enough training, such habits become tastes–doing “bad” things makes one uncomfortable, even if nobody is watching, so one does not do them. After a while, one does not even have to decide not to do them. You might describe the process as synthesizing a conscience.

Essentially, it is cognitively hard to convincingly fake being virtuous while being greedy whenever you can get away with it, and so it makes more sense for you to actually be virtuous. Much ancient philosophy follows similar reasoning, seeing virtue as a cultivated habit; David Friedman simply did us the customary service of an economist and converted the intuition into more easily analyzable formalisms. Now, let us compress this formalism even further. In short, the key point here is that humans are leaky agents – with every second of our action, we essentially indirectly expose parts of our source code. If we are actually planning to be nice, we act one way, and if we are only pretending to be nice while actually intending to strike as soon as our friends are vulnerable, we act differently, and others can often notice.

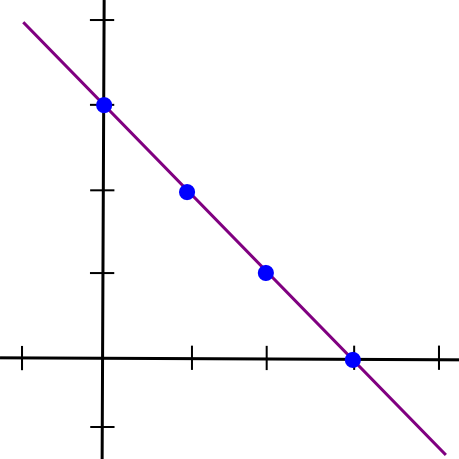

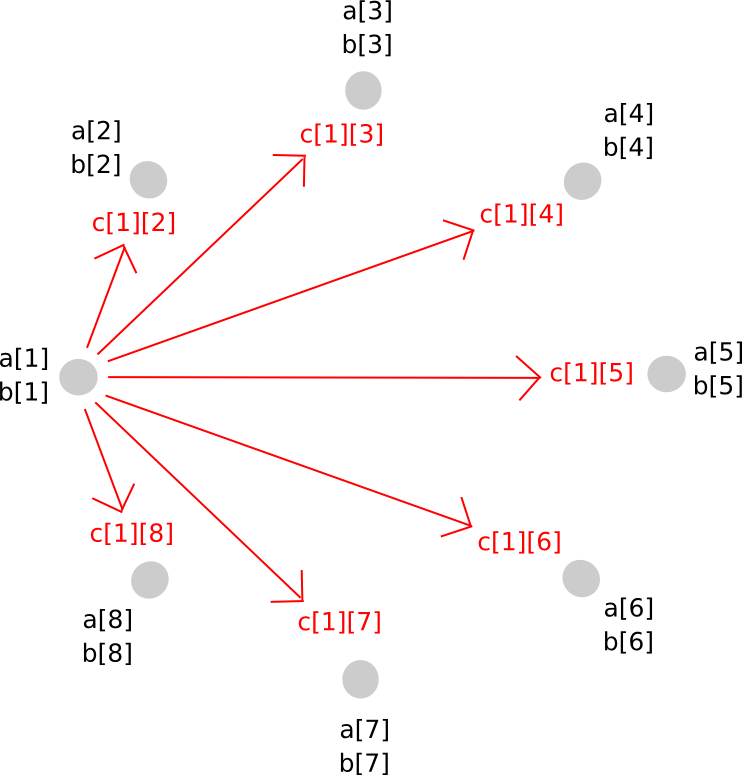

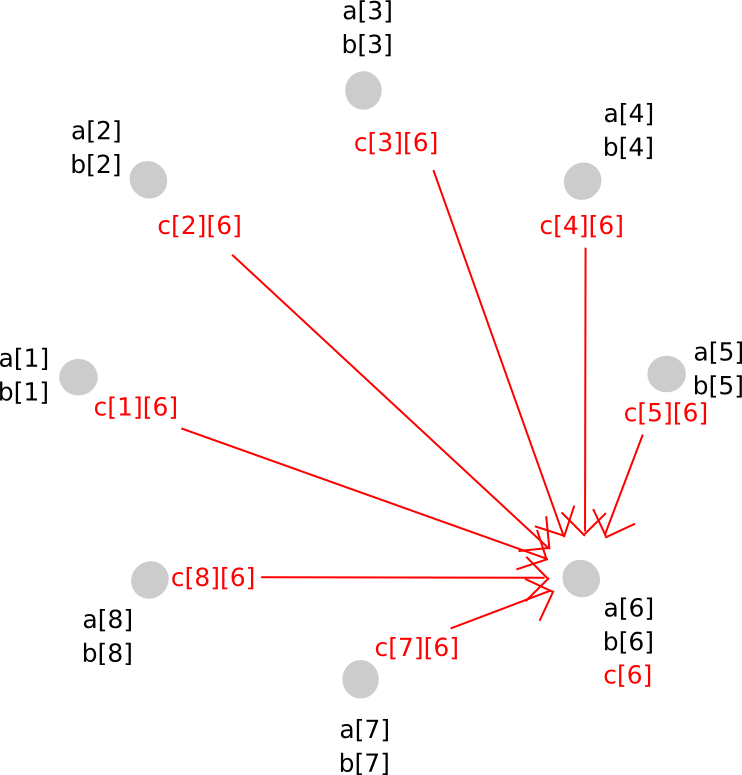

This might seem like a disadvantage; however, it allows a kind of cooperation that was not possible with the simple game-theoretic agents described above. Suppose that two agents, A and B, each have the ability to “read” whether or not the other is “virtuous” to some degree of accuracy, and are playing a symmetric Prisoner’s Dilemma. In this case, the agents can adopt the following strategy, which we assume to be a virtuous strategy:

- Try to determine if the other party is virtuous.

- If the other party is virtuous, cooperate.

- If the other party is not virtuous, defect.

If two virtuous agents come into contact with each other, both will cooperate, and get a larger reward. If a virtuous agent comes into contact with a non-virtuous agent, the virtuous agent will defect, so the non-virtuous agent cannot exploit it. Hence, in all cases, the virtuous agent does at least as well as the non-virtuous agent, and often better. This is the essence of superrationality.
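To see how much the argument leans on the "some degree of accuracy" assumption, here is a minimal sketch under stated assumptions: the symmetric payoffs from the paperclip table (3/2/1/0), a non-virtuous agent that always defects, and a virtuous agent whose read of the other party is correct with probability `accuracy`, independently for each agent. The function names and the 50/50 population split are hypothetical.

```python
# Symmetric Prisoner's Dilemma payoff to "me", keyed by (my_move, their_move).
# Same numbers as the paperclip table: T=3 > R=2 > P=1 > S=0.
PAYOFF = {("C", "C"): 2, ("C", "D"): 0, ("D", "C"): 3, ("D", "D"): 1}


def coop_prob(kind: str, other_kind: str, accuracy: float) -> float:
    """Probability that an agent of `kind` ("V" or "N") cooperates with `other_kind`.

    A virtuous agent cooperates iff it reads the other party as virtuous, and its
    read is correct with probability `accuracy`. A non-virtuous agent always defects.
    """
    if kind == "N":
        return 0.0
    return accuracy if other_kind == "V" else 1.0 - accuracy


def expected_payoff(kind: str, other_kind: str, accuracy: float) -> float:
    # The two agents' reads are treated as independent coin flips.
    p_me = coop_prob(kind, other_kind, accuracy)
    p_them = coop_prob(other_kind, kind, accuracy)
    total = 0.0
    for my_move, p_m in (("C", p_me), ("D", 1.0 - p_me)):
        for their_move, p_t in (("C", p_them), ("D", 1.0 - p_them)):
            total += p_m * p_t * PAYOFF[(my_move, their_move)]
    return total


def population_payoff(kind: str, frac_virtuous: float, accuracy: float) -> float:
    # Average payoff when the opponent is drawn at random from the population.
    return (frac_virtuous * expected_payoff(kind, "V", accuracy)
            + (1.0 - frac_virtuous) * expected_payoff(kind, "N", accuracy))


for accuracy in (1.0, 0.9, 0.5):
    v = population_payoff("V", 0.5, accuracy)
    n = population_payoff("N", 0.5, accuracy)
    print(f"accuracy={accuracy}: virtuous {v:.2f}, non-virtuous {n:.2f}")
```

With perfect reads the virtuous strategy earns 1.50 against the non-virtuous strategy's 1.00, and at 0.9 it still leads (1.40 versus 1.10). At `accuracy=0.5`, where reads are no better than a coin flip, the ordering reverses (1.00 versus 1.50), which is why the whole mechanism depends on agents being readable.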

As contrived as this strategy seems, human cultures have some deeply ingrained mechanisms for implementing it, particularly relating to mistrusting agents who try hard to make themselves less readable – see the common adage that you should never trust someone who doesn’t drink. Of course, there is a class of individuals who can convincingly pretend to be friendly while actually planning to defect at every moment – these are called sociopaths, and they are perhaps the primary defect of this system when implemented by humans.

Centralized Manual Organizations…

This kind of superrational cooperation has arguably been an important bedrock of human cooperation for the last ten thousand years, allowing people to be honest with each other even in those cases where simple market incentives might instead drive defection. However, perhaps one of the main unfortunate byproducts of the modern birth of large centralized organizations is that they allow people to effectively cheat others’ ability to read their minds, making this kind of cooperation more difficult.

Most people in modern civilization have benefited quite handsomely from, and have also indirectly financed, at least some instance of someone in some third world country dumping toxic waste into a river to build products more cheaply for them; however, we do not even realize that we are indirectly participating in such defection, because corporations do the dirty work for us. The market is so powerful that it can arbitrage even our own morality, placing the dirtiest and most unsavory tasks in the hands of those individuals who are willing to absorb their conscience at lowest cost and effectively hiding it from everyone else. The corporations themselves are perfectly able to have a smiley face produced as their public image by their marketing departments, leaving it to a completely different department to sweet-talk potential customers. This second department may not even know that the department producing the product is any less virtuous and sweet than they are.

The internet has often been hailed as a solution to many of these organizational and political problems, and indeed it does do a great job of reducing information asymmetries and offering transparency. However, as far as the decreasing viability of superrational cooperation goes, it can also sometimes make things even worse. Online, we are much less “leaky” even as individuals, and so once again it is easier to appear virtuous while actually intending to cheat. This is part of the reason why scams online and in the cryptocurrency space are more common than offline, and is perhaps one of the primary arguments against moving all economic interaction to the internet a la cryptoanarchism (the other argument being that cryptoanarchism removes the ability to inflict unboundedly large punishments, weakening the strength of a large class of economic mechanisms).

A much greater degree of transparency, arguably, offers a solution. Individuals are moderately leaky, current centralized organizations are less leaky, but organizations where information is constantly and randomly being released to the world left, right and center are even more leaky than individuals are. Imagine a world where if you start even thinking about how you will cheat your friend, business partner or spouse, there is a 1% chance that the left part of your hippocampus will rebel and send a full recording of your thoughts to your intended victim in exchange for a $7,500 reward. That is what it “feels” like to be the management board of a leaky organization.

This is essentially a restatement of the founding ideology behind Wikileaks, and more recently an incentivized Wikileaks alternative, slur.io, came out to push the envelope further. However, Wikileaks exists, and yet shadowy centralized organizations continue to exist and are in many cases still quite shadowy. Perhaps incentivization, coupled with prediction-like mechanisms for people to profit from outing their employers’ misdeeds, is what will open the floodgates for greater transparency, but at the same time we can also take a different route: offer a way for organizations to make themselves voluntarily, and radically, leaky and superrational to an extent never seen before.

… and DAOs

Decentralized autonomous organizations, as a concept, are unique in that their governance algorithms are not just leaky, but actually completely public. That is, while with even transparent centralized organizations outsiders can get a rough idea of what the organization’s temperament is, with a DAO outsiders can actually see the organization’s entire source code. Now, they do not see the “source code” of the humans that are behind the DAO, but there are ways to write a DAO’s source code so that it is heavily biased toward a particular objective regardless of who its participants are. A futarchy maximizing the average human lifespan will act very differently from a futarchy maximizing the production of paperclips, even if the exact same people are running it. Hence, not only will the organization make it obvious to everyone if it starts to cheat; it is not even possible for the organization’s “mind” to cheat.
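In futarchy, the organization adopts whichever proposal conditional prediction markets expect to score best on an objective metric fixed in its code. The sketch below abstracts the markets away into a table of hypothetical estimates (all proposal names and numbers are made up, and details such as how conditional markets are settled or reverted are ignored) to show that swapping only the hard-coded objective changes the decision, while the participants and their estimates stay identical.

```python
# Hypothetical conditional-market estimates: for each proposal, what traders expect
# each metric to be if that proposal is adopted. The same traders, and therefore the
# same estimates, feed both organizations below.
ESTIMATES = {
    "fund_medical_research": {"avg_lifespan_years": 81.5, "paperclips": 1_000},
    "build_paperclip_factory": {"avg_lifespan_years": 80.9, "paperclips": 5_000_000},
    "status_quo": {"avg_lifespan_years": 81.0, "paperclips": 2_000},
}


def futarchy_decide(estimates: dict, objective: str) -> str:
    # Adopt the proposal whose market-estimated objective value is highest.
    # The objective is part of the DAO's public code, not chosen by participants.
    return max(estimates, key=lambda proposal: estimates[proposal][objective])


print(futarchy_decide(ESTIMATES, "avg_lifespan_years"))  # fund_medical_research
print(futarchy_decide(ESTIMATES, "paperclips"))          # build_paperclip_factory
```

Because the objective is visible in the code, an outsider can predict the organization's choices from the same inputs, which is the sense in which its "mind" cannot secretly cheat.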

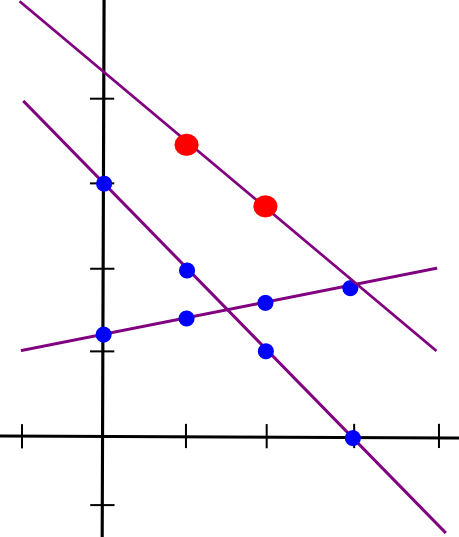

Now, what would superrational cooperation using DAOs look like? First, we would need to see some DAOs actually appear. There are a few use-cases where it seems not too far-fetched to expect them to succeed: gambling, stablecoins, decentralized file storage, one-ID-per-person data provision, SchellingCoin, etc. However, we can call these DAOs type I DAOs: they have some internal state, but little autonomous governance. They cannot do anything except perhaps adjust a few of their own parameters to maximize some utility metric via PID controllers, simulated annealing or other simple optimization algorithms. Hence, they are in a weak sense superrational, but they are also rather limited and stupid, and so they will often rely on being upgraded by an external process which is not superrational at all.
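As an illustration of how limited a type I DAO's "decisions" are, here is a minimal sketch of one steering a single parameter with a textbook discrete PID controller. Everything concrete is a hypothetical assumption: a file-storage DAO that sets a per-period reward, a target of 100 storage providers, a made-up linear supply curve (providers = 10 × reward), and hand-picked gains. Note that a PID controller drives a metric toward a setpoint rather than maximizing it directly; the derivative gain is left at zero here, so this particular run is effectively PI control.

```python
class PIDController:
    """Textbook discrete PID controller: output = kp*e + ki*sum(e*dt) + kd*de/dt."""

    def __init__(self, kp: float, ki: float, kd: float, setpoint: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint = setpoint
        self.integral = 0.0
        self.prev_error = None

    def update(self, measurement: float, dt: float = 1.0) -> float:
        error = self.setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first step, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def providers_for_reward(reward: float) -> float:
    # Hypothetical, made-up supply curve: each unit of reward attracts 10 providers.
    return 10.0 * reward


controller = PIDController(kp=0.02, ki=0.05, kd=0.0, setpoint=100.0)
providers = 0.0
for _ in range(50):
    reward = controller.update(providers)  # the DAO's only "decision"
    providers = providers_for_reward(reward)

print(round(reward, 3), round(providers, 3))  # approximately 10.0 and 100.0
```

The loop converges because the integral term keeps adjusting the reward until the error vanishes; anything beyond tuning this one knob, such as changing the target itself, would require the external upgrade process the text describes.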

In order to go further, we need type II DAOs: DAOs with a governance algorithm capable of making theoretically arbitrary decisions. Futarchy, various forms of democracy, and various forms of subjective extra-protocol governance (i.e., in case of substantial disagreement, the DAO clones itself into multiple parts with one part for each proposed policy, and everyone chooses which version to interact with) are the only ones we are currently aware of, though other fundamental approaches and clever combinations of these will likely continue to appear. Once DAOs can make arbitrary decisions, they will be able to engage in superrational commerce not only with their human customers, but also potentially with each other.
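The cloning form of subjective governance can be sketched in a few lines. This is a hypothetical illustration, not a described protocol: the `DAOState` type, the `fork_on_disagreement` function, and the policy names are invented here, and the sketch ignores how "substantial disagreement" is detected and how assets held outside the DAO would be handled. It only shows the core idea that each clone starts from an identical, independent copy of the pre-fork state.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class DAOState:
    policy: str
    balances: dict = field(default_factory=dict)


def fork_on_disagreement(state: DAOState, proposals: list) -> dict:
    # One clone per proposed policy. Every clone starts from an independent deep
    # copy of the pre-fork balances, so holders keep their stake in every branch
    # and later activity in one branch cannot affect another.
    return {
        proposal: DAOState(policy=proposal, balances=copy.deepcopy(state.balances))
        for proposal in proposals
    }


original = DAOState(policy="fee_1pct", balances={"alice": 10, "bob": 5})
branches = fork_on_disagreement(original, ["fee_1pct", "fee_2pct"])

# Users then choose which branch to interact with; e.g. Bob spends in one branch only.
branches["fee_2pct"].balances["bob"] -= 5
print(branches["fee_1pct"].balances, branches["fee_2pct"].balances)
# {'alice': 10, 'bob': 5} {'alice': 10, 'bob': 0}
```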

What kinds of market failures can superrational cooperation solve that plain old regular cooperation cannot? Public goods problems may unfortunately be outside the scope; none of the mechanisms described here solve the massively-multiparty incentivization problem. In this model, the reason why organizations make themselves decentralized/leaky is so that others will trust them more, and so organizations that fail to do this will be excluded from the economic benefits of this “circle of trust”. With public goods, the whole problem is that there is no way to exclude anyone from benefiting, so the strategy fails. However, anything related to information asymmetries falls squarely within the scope, and this scope is large indeed; as society becomes more and more complex, cheating will in many ways become progressively easier to do and harder to police or even understand; the modern financial system is just one example. Perhaps the true promise of DAOs, if there is any promise at all, is precisely to help with this.

The post Superrationality and DAOs appeared first on .